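VictoriaMetrics is a fast, cost-effective and scalable time series database. It can be used as long-term remote storage for Prometheus. If the ingestion rate is below one million data points per second, the single-node version is recommended over the cluster version.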

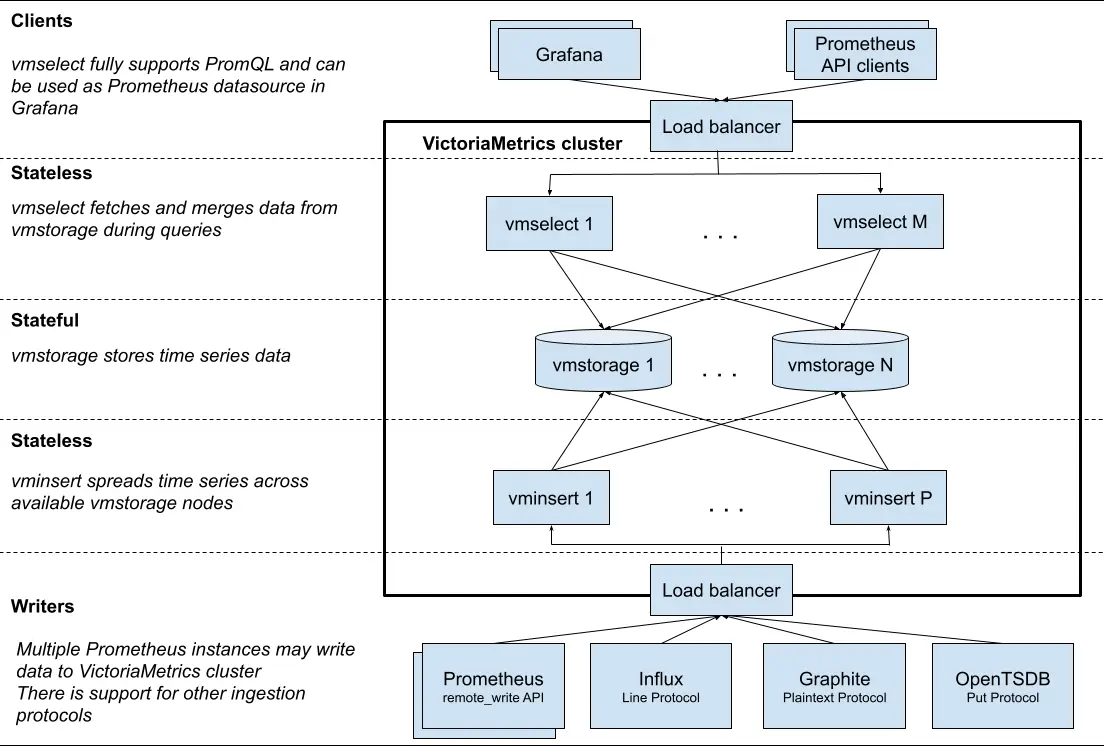

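A VictoriaMetrics cluster consists of the following services. vmalert and vmagent are standalone components that this article deploys alongside the cluster.

- vmstorage: stores raw data and returns the queried data for the given label filters over the given time range.
- vminsert: accepts ingested data and spreads it across the vmstorage nodes, using consistent hashing over the metric name and all of its labels.
- vmselect: executes incoming queries by fetching the required data from all configured vmstorage nodes.
- vmalert: handles alerting.
  - Like Prometheus, it supports recording and alerting rules and sends alert notifications.
  - Annotations can use Go templates to format data, iterate or execute expressions.
  - It supports cross-tenant alerting and recording rules.
- vmagent: collects metrics, relabels and filters them, and stores them in VictoriaMetrics or any other storage system. It sends them over the Prometheus remote_write protocol or the VictoriaMetrics remote_write protocol.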
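The sketch below illustrates the vminsert routing idea under a simplifying assumption. The series key (metric name plus its labels, sorted) is hashed with `cksum` and reduced modulo the number of vmstorage nodes.

This is not VictoriaMetrics' actual hash function or its consistent-hashing algorithm. Unlike real consistent hashing, plain modulo remaps most series when the node count changes. The sketch only shows that a given series always lands on the same node. `pick_storage_node` is a hypothetical helper.

```shell
# Hypothetical helper: map one series to a vmstorage node index.
# Simplification (assumption): cksum (CRC) + modulo stands in for
# vminsert's real consistent hashing over metric name + all labels.
pick_storage_node() {
  local metric="$1" labels="$2" nodes="$3"
  local sorted_labels key hash
  # Sort labels so that their order does not change the series key
  sorted_labels=$(printf '%s\n' "$labels" | tr ',' '\n' | sort | paste -sd ',' -)
  key="${metric}{${sorted_labels}}"
  # First field of cksum output is the CRC of the key
  hash=$(printf '%s' "$key" | cksum | cut -d ' ' -f 1)
  echo $(( hash % nodes ))
}

# Same series with a different label order -> same node index
pick_storage_node http_requests_total 'job="api",instance="a:80"' 2
pick_storage_node http_requests_total 'instance="a:80",job="api"' 2
```

Both calls print the same index because the key is built from sorted labels.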

Deployment references:

- https://docs.victoriametrics.com/helm/victoriametrics-operator/
- https://docs.victoriametrics.com/cluster-victoriametrics/
- https://zhuanlan.zhihu.com/p/715516533

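⚠️ All operations in this document are performed locally. For a production deployment, replace port-forward with Ingress routing and give the VMCluster an appropriate configuration.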

Add the VictoriaMetrics Helm repository:

```shell
➜ ~ helm repo add vm https://victoriametrics.github.io/helm-charts/
"vm" has been added to your repositories
➜ ~ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "aliyun" chart repository
...Successfully got an update from the "vm" chart repository
Update Complete. ⎈Happy Helming!⎈
➜ ~ helm search repo vm/
NAME                              CHART VERSION   APP VERSION   DESCRIPTION
vm/victoria-logs-single           0.8.2           v1.0.0        Victoria Logs Single version - high-performance...
vm/victoria-metrics-agent         0.14.9          v1.106.1      Victoria Metrics Agent - collects metrics from ...
vm/victoria-metrics-alert         0.12.6          v1.106.1      Victoria Metrics Alert - executes a list of giv...
vm/victoria-metrics-anomaly       1.6.7           v1.18.4       Victoria Metrics Anomaly Detection - a service ...
vm/victoria-metrics-auth          0.7.7           v1.106.1      Victoria Metrics Auth - is a simple auth proxy ...
vm/victoria-metrics-cluster       0.14.12         v1.106.1      Victoria Metrics Cluster version - high-perform...
vm/victoria-metrics-common        0.0.31                        Victoria Metrics Common - contains shared templ...
vm/victoria-metrics-distributed   0.5.0           v1.106.1      A Helm chart for Running VMCluster on Multiple ...
vm/victoria-metrics-gateway       0.5.7           v1.106.1      Victoria Metrics Gateway - Auth & Rate-Limittin...
vm/victoria-metrics-k8s-stack     0.28.4          v1.106.1      Kubernetes monitoring on VictoriaMetrics stack....
vm/victoria-metrics-operator      0.38.0          v0.49.1       Victoria Metrics Operator
vm/victoria-metrics-single        0.12.7          v1.106.1      Victoria Metrics Single version - high-performa...
```

Install VictoriaMetrics-Operator:

```shell
➜ ~ helm install vmo vm/victoria-metrics-operator
NAME: vmo
LAST DEPLOYED: Tue Dec 24 14:38:21 2024
NAMESPACE: default
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
victoria-metrics-operator has been installed. Check its status by running:
  kubectl --namespace default get pods -l "app.kubernetes.io/instance=vmo"

Get more information on https://github.com/VictoriaMetrics/helm-charts/tree/master/charts/victoria-metrics-operator.
See "Getting started guide for VM Operator" on https://docs.victoriametrics.com/guides/getting-started-with-vm-operator
➜ ~ kubectl get pods -A | grep 'vmo'
default   vmo-victoria-metrics-operator-54bf97548f-fj8j7   1/1   Running   0   1m
```

Install the VictoriaMetrics cluster. The operator deploys the cluster according to our definition. Run `kubectl explain VMCluster.spec` to see which fields of the object can be configured.

vmcluster.yaml:

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMCluster
metadata:
  name: vmcluster
  namespace: default   # the service addresses used below (*.default.svc) assume this namespace
spec:
  retentionPeriod: "1w"
  vmstorage:
    replicaCount: 2
    storageDataPath: /vm-data
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: cbs
          resources:
            requests:
              storage: 10Gi
    resources:
      limits:
        cpu: "0.5"
        memory: 500Mi
  vmselect:
    replicaCount: 2
    cacheMountPath: "/select-cache"
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: cbs
          resources:
            requests:
              storage: "2Gi"
    resources:
      limits:
        cpu: "0.3"
        memory: "300Mi"
  vminsert:
    replicaCount: 2
```

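Notes:

- When replicationFactor is enabled, vminsert stores replicationFactor copies of every sample on different vmstorage nodes. The -dedup.minScrapeInterval flag is therefore needed to remove the duplicate data.
- Enabling or changing replicationFactor may cause partial data loss.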
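The shell model below shows what deduplication does to replicated data. It assumes the rule described in the VictoriaMetrics deduplication docs: per series, keep the raw sample with the largest timestamp in each discrete `-dedup.minScrapeInterval` interval, and on equal timestamps keep the larger value.

`dedup_samples` is a hypothetical helper. Timestamps are plain integers here, and the code is a model rather than VictoriaMetrics source code.

```shell
# Hypothetical helper: deduplicate ONE series given as "timestamp value"
# lines on stdin. Per interval ((b-1)*iv, b*iv] it keeps the sample with
# the largest timestamp (equal timestamps: the larger value).
# Simplified model of -dedup.minScrapeInterval, not VictoriaMetrics code.
dedup_samples() {
  local interval="$1"
  awk -v iv="$interval" '
    NF >= 2 {
      ts = $1 + 0; v = $2 + 0
      b = int((ts + iv - 1) / iv)   # index of the interval containing ts
      if (!(b in best_ts) || ts > best_ts[b] || (ts == best_ts[b] && v > best_v[b])) {
        best_ts[b] = ts; best_v[b] = v; line[b] = $1 " " $2
      }
    }
    END { for (b in line) print line[b] }
  ' | sort -n
}

# Two identical copies of every sample, as stored with replicationFactor: 2
printf '1000 5\n1000 5\n2000 6\n2000 6\n' | dedup_samples 1
```

With a 1-unit interval only exact duplicates collapse. A larger interval additionally thins out samples that fall into the same interval.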

```shell
➜ ~ kubectl apply -f vmcluster.yaml
vmcluster.operator.victoriametrics.com/vmcluster created
➜ ~ kubectl get pods
NAME                                             READY   STATUS    RESTARTS   AGE
vminsert-vmcluster-8587774678-2xxt6              1/1     Running   0          12s
vminsert-vmcluster-8587774678-mplrr              1/1     Running   0          12s
vmo-victoria-metrics-operator-5d4ff4d8f4-gk4gj   1/1     Running   0          3m58s
vmselect-vmcluster-0                             1/1     Running   0          17s
vmselect-vmcluster-1                             1/1     Running   0          17s
vmstorage-vmcluster-0                            1/1     Running   0          23s
vmstorage-vmcluster-1                            1/1     Running   0          23s
➜ ~ kubectl get vmclusters
NAME        INSERT COUNT   STORAGE COUNT   SELECT COUNT   AGE   STATUS
vmcluster   2              2               2              63s   operational
➜ ~ kubectl get svc | grep vminsert
vminsert-vmcluster   ClusterIP   10.105.160.47   <none>   8480/TCP   6m49s
```

vmagent.yaml:

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMAgent
metadata:
  name: vmagent
spec:
  serviceScrapeNamespaceSelector: {}
  podScrapeNamespaceSelector: {}
  podScrapeSelector: {}
  serviceScrapeSelector: {}
  nodeScrapeSelector: {}
  nodeScrapeNamespaceSelector: {}
  staticScrapeSelector: {}
  staticScrapeNamespaceSelector: {}
  replicaCount: 1
  remoteWrite:
    - url: "http://vminsert-vmcluster.default.svc.cluster.local:8480/insert/0/prometheus/api/v1/write"
```

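The host part of the VMAgent remoteWrite.url is composed as follows:

`<service_name>.<VMCluster_namespace>.svc.<kubernetes_cluster_domain>`

The host is followed by the vminsert port 8480 and the path `/insert/<accountID>/prometheus/api/v1/write`. `<accountID>` is the tenant ID, which is 0 in this article.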
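The same composition rule is written out below as a small shell sketch. `vm_insert_url` and `vm_select_url` are hypothetical helper names.

- The ports 8480/8481, the `/insert/<accountID>/prometheus/api/v1/write` and `/select/<accountID>/prometheus` paths, and tenant ID 0 come from this article's configs.
- `cluster.local` is assumed as the cluster domain, since it is the usual default.

```shell
# Hypothetical helpers assembling in-cluster VictoriaMetrics cluster URLs.
# Args: $1=service  $2=namespace  $3=cluster domain  $4=accountID (tenant)

vm_insert_url() {
  # Remote-write endpoint served by vminsert (port 8480)
  printf 'http://%s.%s.svc.%s:8480/insert/%s/prometheus/api/v1/write\n' "$1" "$2" "$3" "$4"
}

vm_select_url() {
  # Prometheus-compatible query base URL served by vmselect (port 8481)
  printf 'http://%s.%s.svc.%s:8481/select/%s/prometheus\n' "$1" "$2" "$3" "$4"
}

vm_insert_url vminsert-vmcluster default cluster.local 0
vm_select_url vmselect-vmcluster default cluster.local 0
```

The select URL has the same shape as the one used for the Grafana datasource later on. A different tenant only changes the last argument.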

```shell
➜ ~ kubectl apply -f vmagent.yaml
vmagent.operator.victoriametrics.com/vmagent created
➜ ~ kubectl port-forward svc/vmagent-vmagent 8429:8429
Forwarding from 127.0.0.1:8429 -> 8429
Forwarding from [::1]:8429 -> 8429
Handling connection for 8429
Handling connection for 8429
```

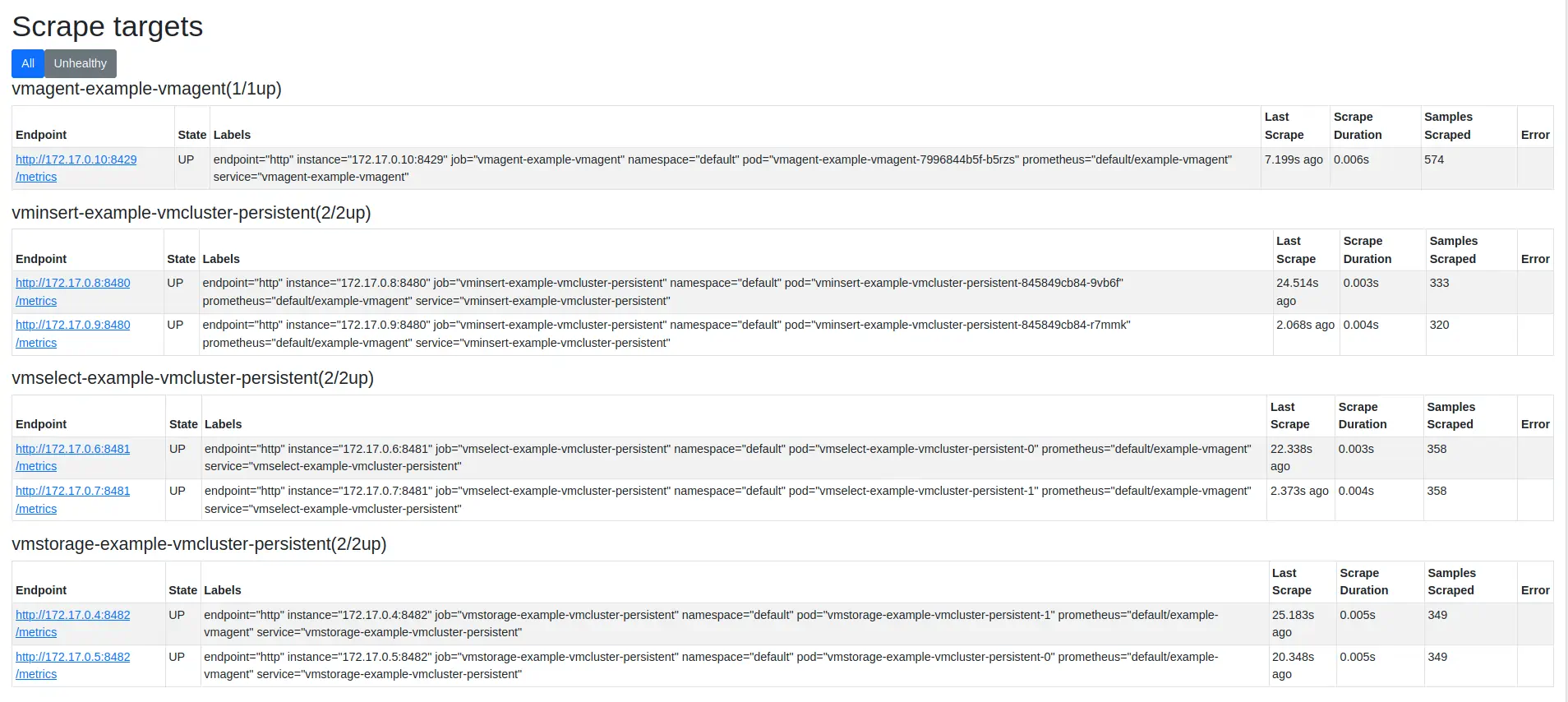

Open http://127.0.0.1:8429/targets in a browser to check whether VMAgent is collecting metrics from the K8s cluster.

Verify the VictoriaMetrics cluster. First, get the vmselect service name:

```shell
➜ ~ kubectl get svc | grep vmselect
vmselect-vmcluster   ClusterIP   None   <none>   8481/TCP   31m
```

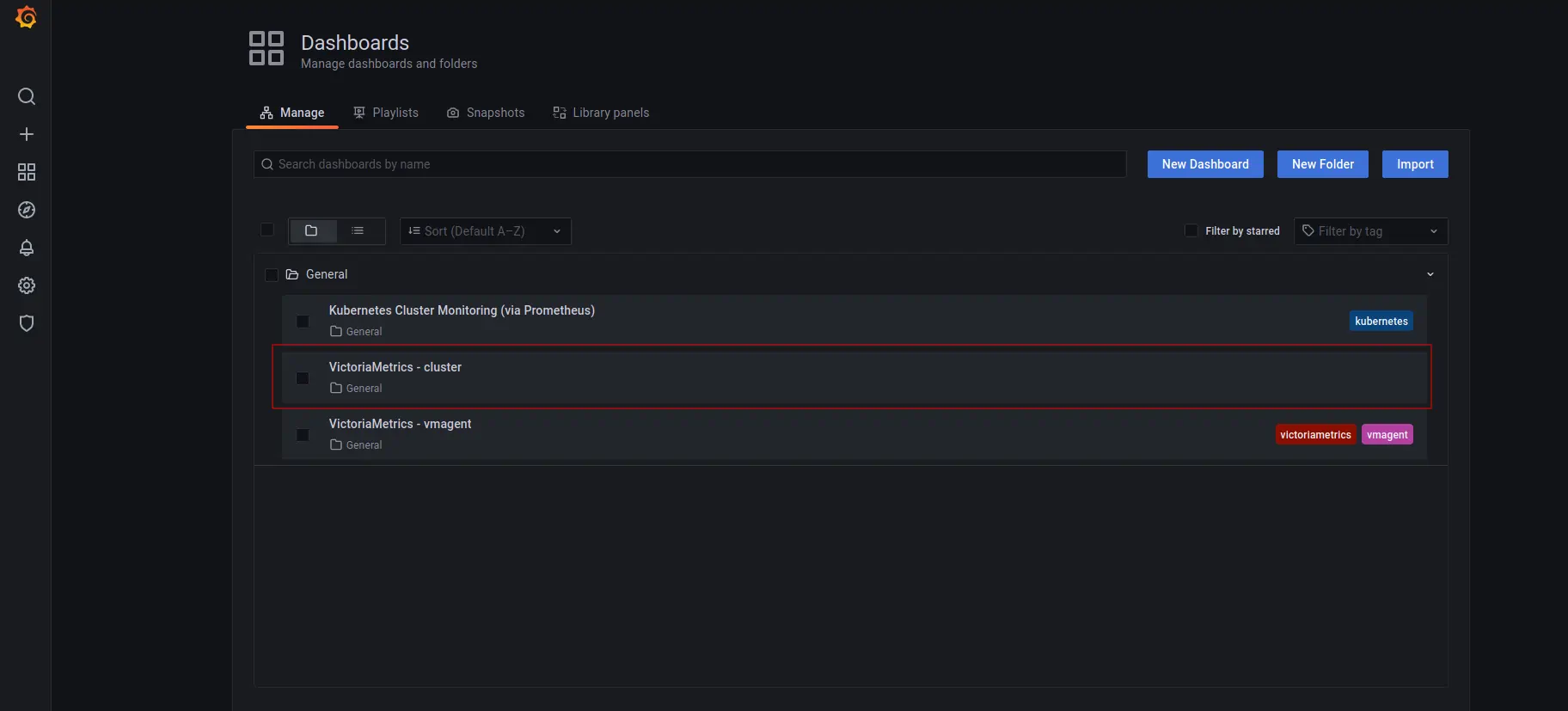

Install Grafana. The grafana/grafana chart comes from the Grafana Helm repository, which has to be added first:

```shell
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update
cat <<EOF | helm install grafana grafana/grafana -f -
datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
      - name: victoriametrics
        type: prometheus
        orgId: 1
        url: http://vmselect-vmcluster.default.svc.cluster.local:8481/select/0/prometheus/
        access: proxy
        isDefault: true
        updateIntervalSeconds: 10
        editable: true

dashboardProviders:
  dashboardproviders.yaml:
    apiVersion: 1
    providers:
      - name: 'default'
        orgId: 1
        folder: ''
        type: file
        disableDeletion: true
        editable: true
        options:
          path: /var/lib/grafana/dashboards/default

dashboards:
  default:
    victoriametrics:
      gnetId: 11176
      revision: 18
      datasource: victoriametrics
    vmagent:
      gnetId: 12683
      revision: 7
      datasource: victoriametrics
    kubernetes:
      gnetId: 14205
      revision: 1
      datasource: victoriametrics
EOF
```

Verify Grafana in the browser:

```shell
➜ ~ kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo
wVj5Rdl1BuPClRy5qgxrztsaoE8v0e4m05DU1cUW
➜ ~ export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=grafana,app.kubernetes.io/instance=grafana" -o jsonpath="{.items[0].metadata.name}")
➜ ~ kubectl --namespace default port-forward $POD_NAME 3000
Forwarding from 127.0.0.1:3000 -> 3000
Forwarding from [::1]:3000 -> 3000
```

Open http://localhost:3000

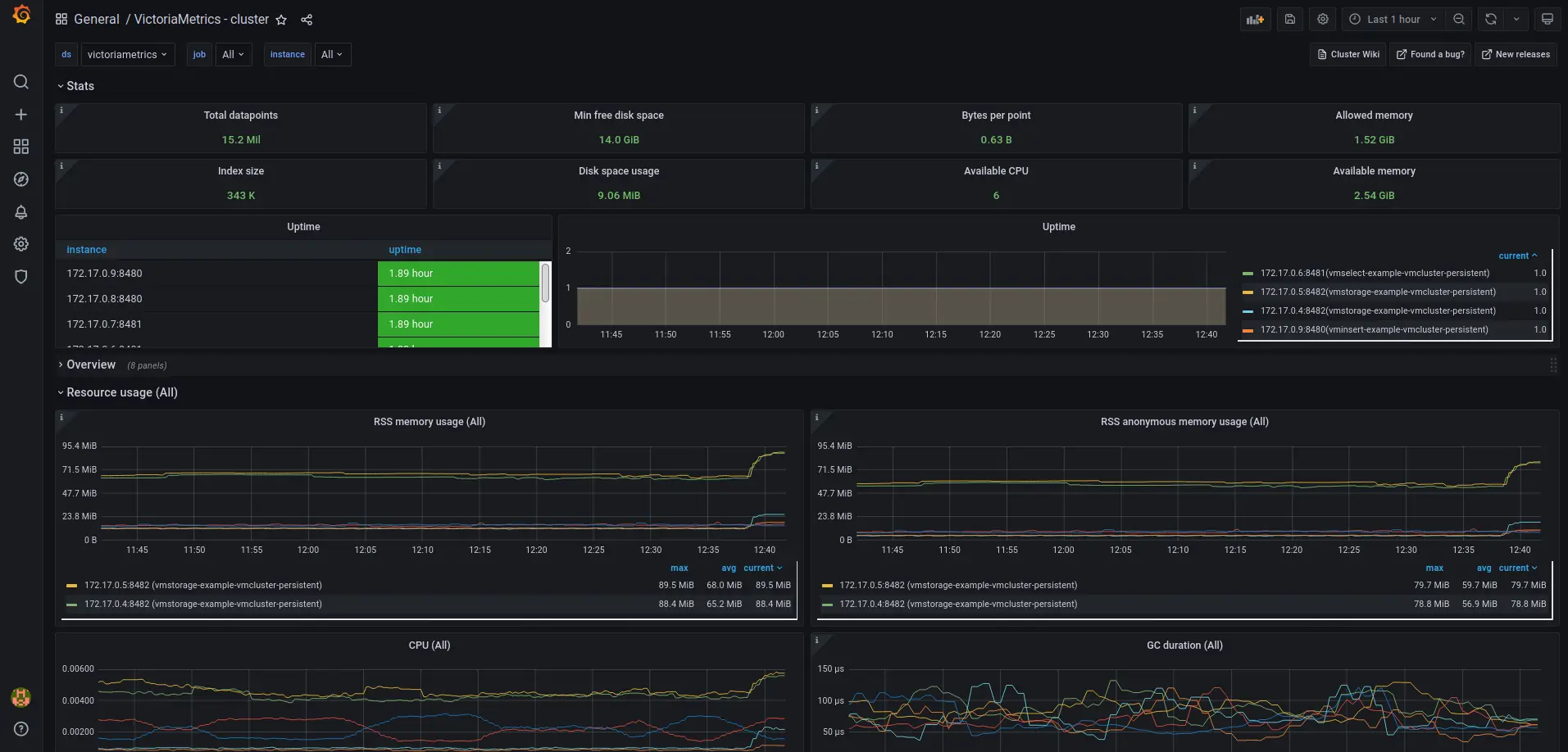

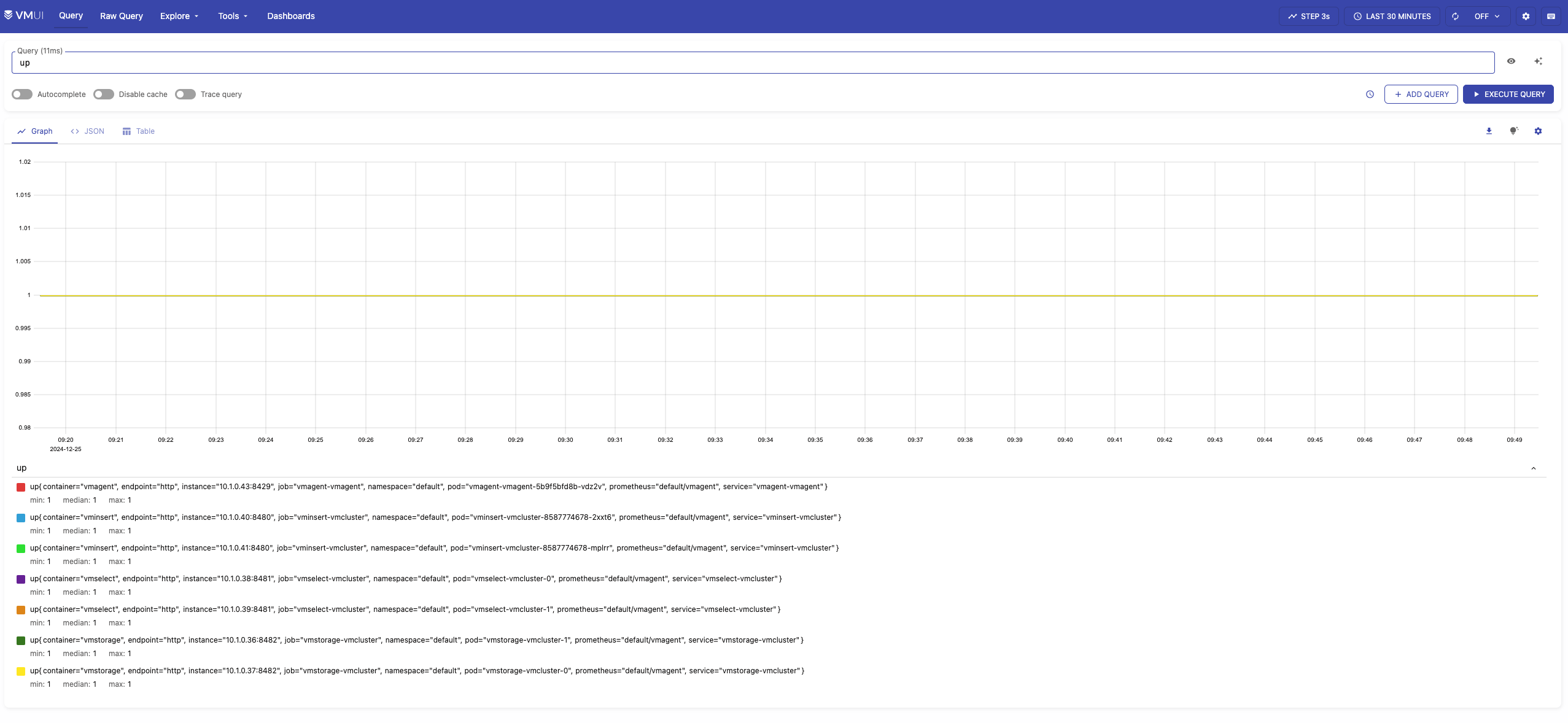

vmui

The VictoriaMetrics cluster provides a UI for query troubleshooting and exploration:

```shell
➜ ~ kubectl port-forward svc/vmselect-vmcluster 8481:8481
Forwarding from 127.0.0.1:8481 -> 8481
Forwarding from [::1]:8481 -> 8481
```

Open http://localhost:8481/select/0/vmui/

Best practice: monitoring the JVM

Create a namespace in the DCE cluster:

```shell
➜ ~ kubectl create ns monitor
namespace/monitor created
➜ ~ kubectl version --short
Flag --short has been deprecated, and will be removed in the future. The --short output will become the default.
Client Version: v1.24.0
Kustomize Version: v4.5.4
Server Version: v1.18.20
WARNING: version difference between client (1.24) and server (1.18) exceeds the supported minor version skew of +/-1
```

Create a ServiceAccount in the monitor namespace (ServiceAccount.yaml):

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: "1.18.20"
  name: prometheus-k8s
  namespace: monitor
```

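Create a Secret; the token is then generated for it automatically. Since Kubernetes 1.24, creating a ServiceAccount no longer automatically creates a bound token Secret. A Secret that references the ServiceAccount therefore has to be created explicitly.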

```yaml
apiVersion: v1
kind: Secret
metadata:
  annotations:
    kubernetes.io/service-account.name: prometheus-k8s
  name: prometheus-k8s-secret
  namespace: monitor
type: kubernetes.io/service-account-token
```

Create the ClusterRole (ClusterRole.yaml):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: "1.18.20"
  name: prometheus-k8s
  namespace: monitor
rules:
  - apiGroups:
      - ""
    resources:
      - nodes
      - nodes/metrics
      - nodes/proxy   # required to scrape cAdvisor through the API server proxy (/api/v1/nodes/<node>/proxy/...)
      - services
      - endpoints
      - pods
    verbs:
      - get
      - list
      - watch
```

Bind the role to the ServiceAccount (ClusterRoleBinding.yaml):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/instance: k8s
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: "1.18.20"
  name: prometheus-k8s
  namespace: monitor
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-k8s
subjects:
  - kind: ServiceAccount
    name: prometheus-k8s
    namespace: monitor
```

In the VM cluster, create a ConfigMap holding the DCE cluster's token and deploy it. First read the token from the Secret in the DCE cluster and decode it. Placeholders stand in for the token values below.

```shell
➜ ~ kubectl get secret prometheus-k8s-secret -n monitor -o yaml | grep token
token: <base64-encoded token>
{"apiVersion":"v1","kind":"Secret","metadata":{"annotations":{"kubernetes.io/service-account.name":"prometheus-k8s"},"name":"prometheus-k8s-secret","namespace":"monitor"},"type":"kubernetes.io/service-account-token"}
type: kubernetes.io/service-account-token
➜ ~ echo "<base64-encoded token>" | base64 --decode
```
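The decoded value is a JWT: three base64url-encoded segments separated by dots. Before writing it into the ConfigMap, you can check which ServiceAccount it belongs to by decoding the middle (payload) segment.

This is a minimal sketch, assuming a `base64` that accepts `--decode` (GNU coreutils or recent macOS). `jwt_payload` is a hypothetical helper and does not verify the signature.

```shell
# Hypothetical helper: print the JSON payload (2nd segment) of a JWT.
# It only decodes the payload; it does NOT verify the signature.
jwt_payload() {
  local seg
  # base64url uses '-' and '_' where standard base64 uses '+' and '/'
  seg=$(printf '%s' "$1" | cut -d '.' -f 2 | tr '_-' '/+')
  # base64url drops the '=' padding; restore it to a multiple of 4
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 --decode
}

# Usage, with the decoded token from the previous step in a variable:
#   jwt_payload "$decoded_token"
# For the Secret created above, the "sub" claim should read
# "system:serviceaccount:monitor:prometheus-k8s".
```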

Write the base64-decoded token into the `token` field below (dce-token-configmap.yaml):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: dce-token-configmap
data:
  token: |
    <decoded token from the previous step>
```

Deploy dce-token-configmap:

```shell
➜ ~ kubectl apply -f dce-token-configmap.yaml
configmap/dce-token-configmap created
```

Configure the scrape policy in vmagent.yaml:

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMAgent
metadata:
  name: vmagent
spec:
  scrapeInterval: 20s
  replicaCount: 1
  resources:
    requests:
      cpu: 4
      memory: 8Gi
    limits:
      cpu: 4
      memory: 8Gi
  volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount/dce-token/
      name: dce-token
  volumes:
    - configMap:
        defaultMode: 420
        name: dce-token-configmap
      name: dce-token
  remoteWrite:
    - url: "http://vminsert-vmcluster.default.svc.cluster.local:8480/insert/0/prometheus/api/v1/write"
  inlineScrapeConfig: |
    - job_name: dce-jvm-exporter
      # Whether to honor the timestamps exposed by the target
      honor_timestamps: true
      # Scrape interval
      scrape_interval: 20s
      # Scrape timeout; must not exceed scrape_interval
      scrape_timeout: 20s
      # Path of the metrics endpoint
      metrics_path: /actuator/prometheus
      # Scrape protocol
      scheme: http
      # Username and password for basic auth
      basic_auth:
        username: 'actuator'
        password: 'actuator%2@nmk!@#'
      # Skip TLS certificate verification
      tls_config:
        insecure_skip_verify: true
      kubernetes_sd_configs:
        # Address of the Kubernetes API server
        - api_server: https://10.30.150.11:16443
          # Resource type to discover (endpoints, service, pod, node, ingress)
          role: pod
          # Authentication and TLS settings for accessing the Kubernetes API
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/dce-token/token
          tls_config:
            insecure_skip_verify: true
      relabel_configs:
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
          separator: ;
          regex: "true"
          replacement: $1
          action: keep
        - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
          separator: ;
          regex: (.+)
          target_label: __metrics_path__
          replacement: $1
          action: replace
        - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
          separator: ;
          regex: ([^:]+)(?::\d+)?;(\d+)
          target_label: __address__
          replacement: $1:$2
          action: replace
        - separator: ;
          regex: __meta_kubernetes_pod_label_(.+)
          replacement: $1
          action: labelmap
        - source_labels: [__meta_kubernetes_namespace]
          separator: ;
          regex: (.*)
          target_label: kubernetes_namespace
          replacement: $1
          action: replace
        - source_labels: [__meta_kubernetes_pod_name]
          separator: ;
          regex: (.*)
          target_label: kubernetes_pod_name
          replacement: $1
          action: replace
```

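relabel_configs: filters and rewrites target labels.

- source_labels: the list of source labels to match; their values are joined with separator.
- separator: the separator used to join the source_labels values (default `;`).
- target_label: the label that receives the result of a replace action.
- regex: the regular expression matched against the joined source_labels value. It is anchored at both ends and defaults to `(.*)`, which matches any value.
- replacement: the value written by the replace action. It can reference regex capture groups such as `$1`, which is also the default.
- action: defines how the labels are processed:
  - replace: matches regex against the joined source_labels value and writes replacement to target_label.
  - keep: keeps only the targets (or, in metric_relabel_configs, the series) whose joined source_labels value matches regex; the rest are dropped.
  - drop: drops the targets (or series) whose joined source_labels value matches regex and keeps the rest.
  - labelmap: matches regex against the names of all labels of the target and copies the values of matching labels to new labels named by replacement, by default the matched capture group `$1`.
  - labeldrop: removes the labels whose names match regex.
  - labelkeep: removes the labels whose names do not match regex and keeps the matching ones.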
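Here is one step from vmagent.yaml worked through in shell. The `__address__` rule joins `__address__` and the `prometheus.io/port` annotation with `;`, then rewrites the result with `([^:]+)(?::\d+)?;(\d+)` -> `$1:$2`.

The sketch assumes a POSIX ERE translation of that RE2 pattern. ERE has no `(?:...)` or `\d`, so the optional port becomes a capturing group and `$2` becomes `\3`. `rewrite_address` is hypothetical and the pod IP is made up.

```shell
# Hypothetical helper mimicking one relabel "replace" step from vmagent.yaml:
#   source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
#   separator: ;   regex: ([^:]+)(?::\d+)?;(\d+)   replacement: $1:$2
rewrite_address() {
  local address="$1" port="$2"
  local joined="${address};${port}"
  # ERE version of the RE2 regex; relabel regexes are anchored, hence ^...$
  local re='^([^:]+)(:[0-9]+)?;([0-9]+)$'
  if printf '%s\n' "$joined" | grep -Eq "$re"; then
    # Group 3 here corresponds to $2 in the original rule
    printf '%s\n' "$joined" | sed -E "s/${re}/\\1:\\3/"
  else
    # No match: the replace action leaves __address__ unchanged
    printf '%s\n' "$address"
  fi
}

rewrite_address 10.244.1.15:8080 20001   # -> 10.244.1.15:20001
rewrite_address 10.244.1.15 20001        # -> 10.244.1.15:20001
```

The optional port group handles both cases: the address may already carry a container port, or it may have none. In either case the annotated port (20001 here) wins.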

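A Pod is only scraped if it carries the following annotations. Put them in the Pod template's metadata, for example in a Deployment's spec.template.metadata.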

```yaml
annotations:
  prometheus.io/path: /actuator/prometheus
  prometheus.io/port: "20001"
  prometheus.io/scrape: "true"
```

Load the configuration:

```shell
➜ ~ kubectl apply -f vmagent.yaml
vmagent.operator.victoriametrics.com/vmagent configured
```

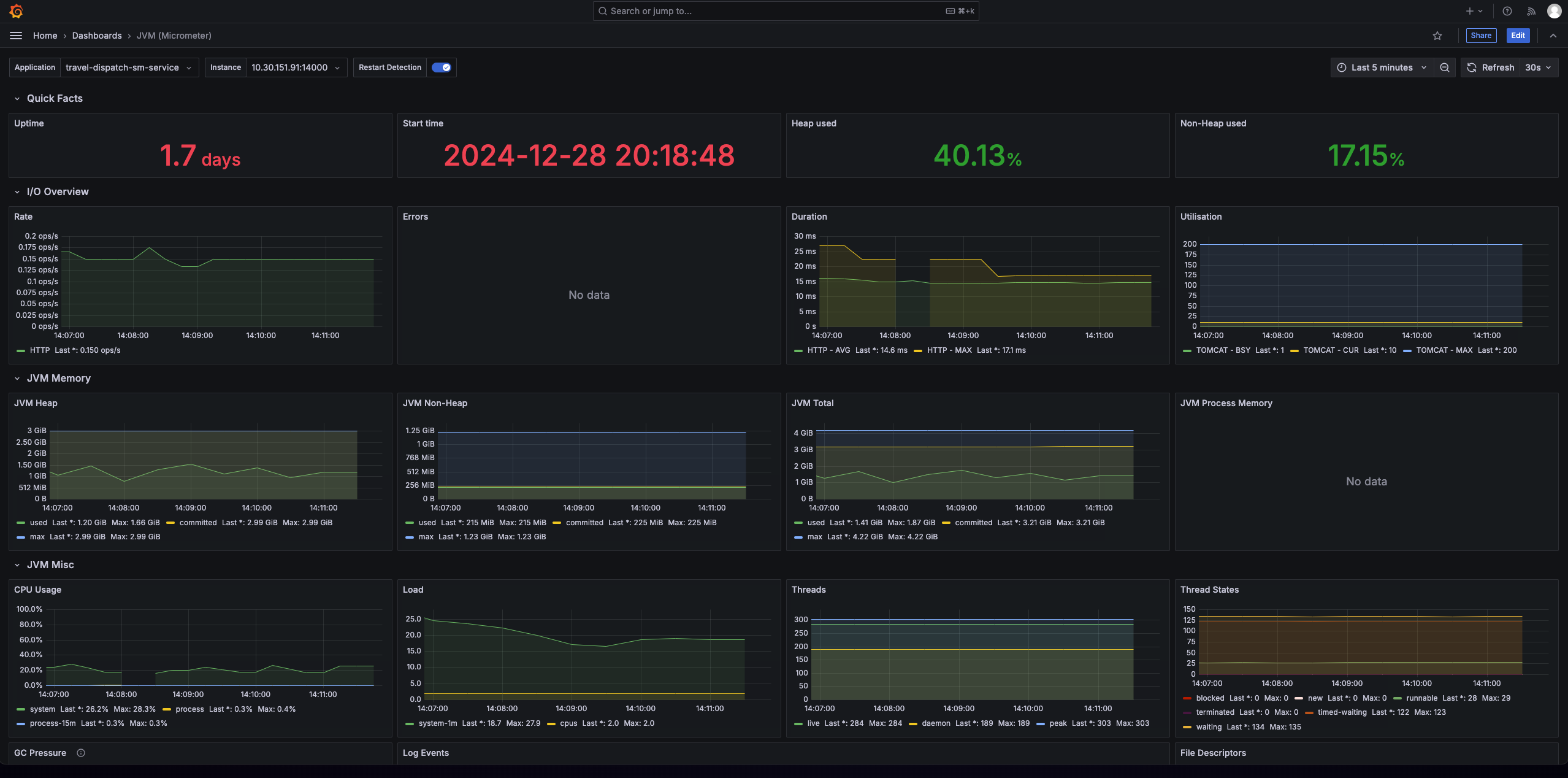

Check in vmagent that the configuration has taken effect.

Grafana dashboard: open Grafana and import dashboard ID 4701 under Dashboards.

Monitoring Kubernetes cluster state

kube-state-metrics: choose a version that suits your cluster and deploy it from https://github.com/kubernetes/kube-state-metrics

To scrape it from outside the cluster, change the kube-state-metrics Service to type NodePort.

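```yaml
- job_name: dce-kube-state-metrics
  honor_timestamps: true
  scrape_interval: 15s
  metrics_path: '/metrics'
  scheme: http
  static_configs:
    - targets: ['10.30.150.1:33987']
  # For very large responses, raise the per-job response size limit in bytes (33554432 = 32 MiB).
  # This is a job-level option; under params it would be sent to the target as a URL query parameter instead.
  max_scrape_size: 33554432
```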

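cAdvisor

⚠️ Two bearer_token_file settings are required:

- The one inside kubernetes_sd_configs authenticates node discovery against the API server.
- The job-level one authenticates the scrape requests that reach cAdvisor through the API server proxy.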

```yaml
- job_name: dce-cadvisor
  metrics_path: /metrics
  scheme: https
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/dce-token/token
  tls_config:
    insecure_skip_verify: true
  kubernetes_sd_configs:
    - api_server: https://10.30.150.11:16443
      role: node
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/dce-token/token
      tls_config:
        insecure_skip_verify: true
  relabel_configs:
    - action: labelmap
      regex: __meta_kubernetes_node_label_(.*)
    - target_label: __address__
      action: replace
      regex: (.*)
      source_labels: ["__address__"]
      replacement: 10.30.150.11:16443
    - source_labels: [__meta_kubernetes_node_name]
      regex: (.*)
      action: replace
      target_label: __metrics_path__
      replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
  metric_relabel_configs:
    - source_labels: [instance]
      separator: ;
      regex: (.+)
      target_label: node
      replacement: $1
      action: replace
```
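After relabeling, every discovered node becomes a scrape of the API server's node proxy. The target URL is assembled from three parts:

- the job's `scheme`;
- the rewritten `__address__`;
- the rewritten `__metrics_path__`.

Below is a sketch of that URL for one node. `cadvisor_scrape_url` is a hypothetical helper and `node-1` is a made-up node name.

```shell
# Hypothetical helper: the URL vmagent ends up scraping for one node,
# i.e. scheme + rewritten __address__ + rewritten __metrics_path__.
cadvisor_scrape_url() {
  local apiserver="$1" node="$2"
  printf 'https://%s/api/v1/nodes/%s/proxy/metrics/cadvisor\n' "$apiserver" "$node"
}

cadvisor_scrape_url 10.30.150.11:16443 node-1

# Manual check of token and RBAC (placeholders, not run here):
#   curl -sk -H "Authorization: Bearer $(cat <token-file>)" \
#     "$(cadvisor_scrape_url 10.30.150.11:16443 <node-name>)" | head
```

A 403 from such a manual request usually points to missing RBAC permissions for the node proxy subresource, not a scrape-config problem.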

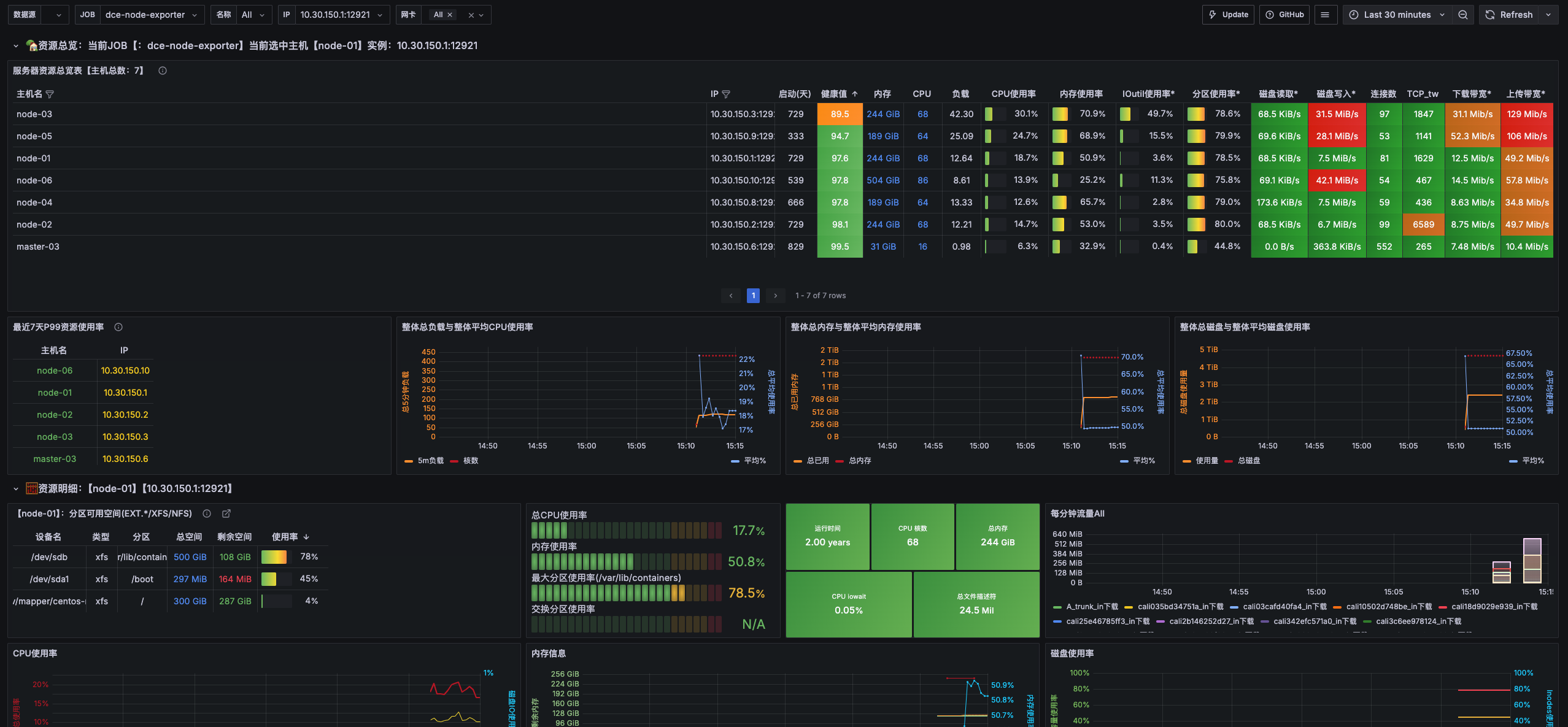

View the dashboard.

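Node-Exporter

Inside a cluster, node-exporter can be configured with the VMNodeScrape resource. This resource does not support an apiserver parameter, so it cannot scrape an external cluster.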

```yaml
apiVersion: operator.victoriametrics.com/v1beta1
kind: VMNodeScrape
metadata:
  name: node-exporter
spec:
  path: /metrics
  port: "9111"
  scrape_interval: 15s
```

Outside the cluster, scrape it with vmagent:

```yaml
- job_name: dce-node-exporter
  honor_timestamps: true
  scrape_interval: 15s
  metrics_path: '/metrics'
  scheme: http
  static_configs:
    - targets: ['10.30.150.1:12921','10.30.150.2:12921','10.30.150.3:12921','10.30.150.4:12921','10.30.150.5:12921','10.30.150.6:12921','10.30.150.8:12921','10.30.150.9:12921','10.30.150.10:12921']
```
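With many hosts, the flow-style `targets` list above is easy to get wrong by hand. Below is a small sketch that generates it from host IPs and the shared node-exporter port. `node_exporter_targets` is a hypothetical helper.

```shell
# Hypothetical helper: build a flow-style static_configs targets list,
# e.g. ['ip1:port','ip2:port'], from a port followed by host IPs.
node_exporter_targets() {
  local port="$1"; shift
  local out="" ip
  for ip in "$@"; do
    # Prepend a comma only when something was already added
    out="${out:+${out},}'${ip}:${port}'"
  done
  printf '[%s]\n' "$out"
}

node_exporter_targets 12921 10.30.150.1 10.30.150.2 10.30.150.3
```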

View the dashboard.

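vmalert

vmalert keeps alert state in memory, so restarting the vmalert process resets the state of all active alerts. To keep this state across restarts, configure -remoteWrite.url and -remoteRead.url. vmalert then persists the state to a remote database.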

Install vmalert and Alertmanager:

```shell
cat <<EOF | helm install vma vm/victoria-metrics-alert -f -
server:
  datasource:
    url: http://vmselect-vmcluster.default.svc:8481/select/0/prometheus
  remote:
    write:
      url: http://vminsert-vmcluster.default.svc:8480/insert/0/prometheus
    read:
      url: http://vmselect-vmcluster.default.svc:8481/select/0/prometheus
  notifier:
    alertmanager:
      url: http://vma-victoria-metrics-alert-alertmanager.default.svc:9093

alertmanager:
  enabled: true
EOF
```

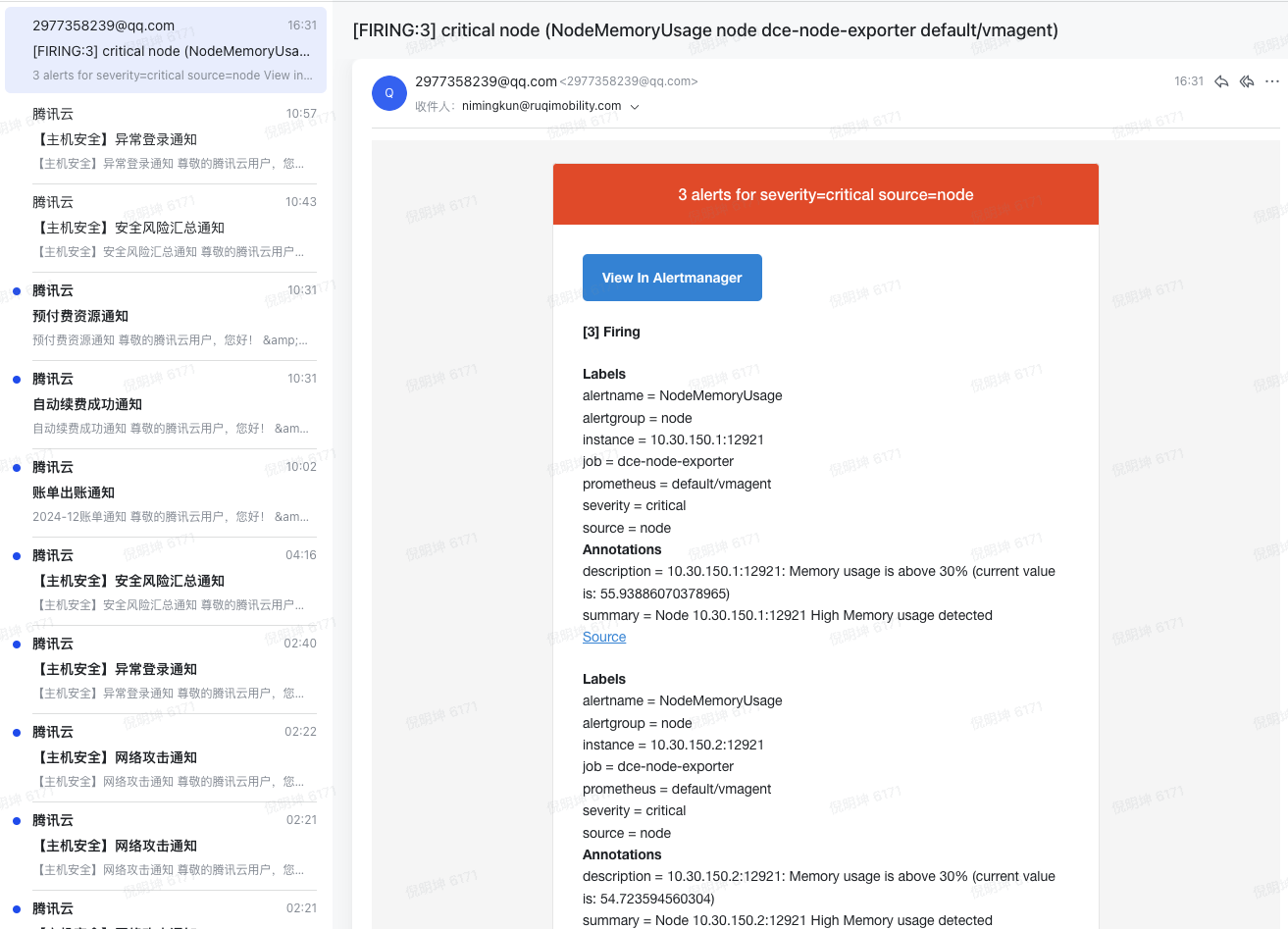

Configure Alertmanager:

```shell
kubectl edit configmap vma-victoria-metrics-alert-alertmanager-config
```

```yaml
global:
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_from: '2977358239@qq.com'
  smtp_auth_username: '2977358239@qq.com'
  smtp_auth_password: 'jgigqzrlhycddhcf'  # the mailbox authorization code, not the login password
  smtp_require_tls: false
templates:
  - '/alertmanager/template/*.tmpl'
route:
  group_by: ['severity', 'source']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 10m
  receiver: email
receivers:
  - name: 'email'
    email_configs:
      - to: 'nimingkun@ruqimobility.com'
        send_resolved: true
```

Configure vmalert:

```shell
kubectl edit configmap vma-victoria-metrics-alert-server-alert-rules-config
```

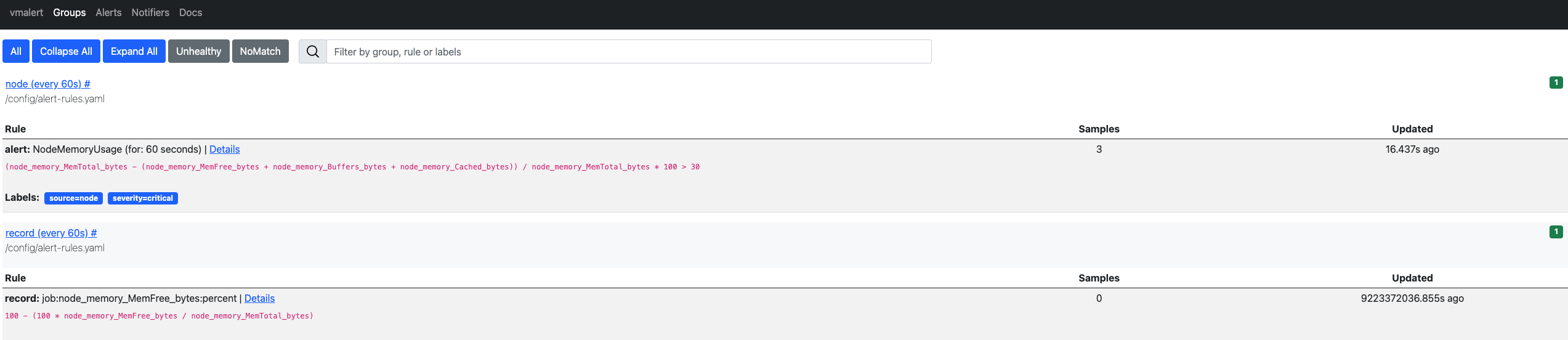

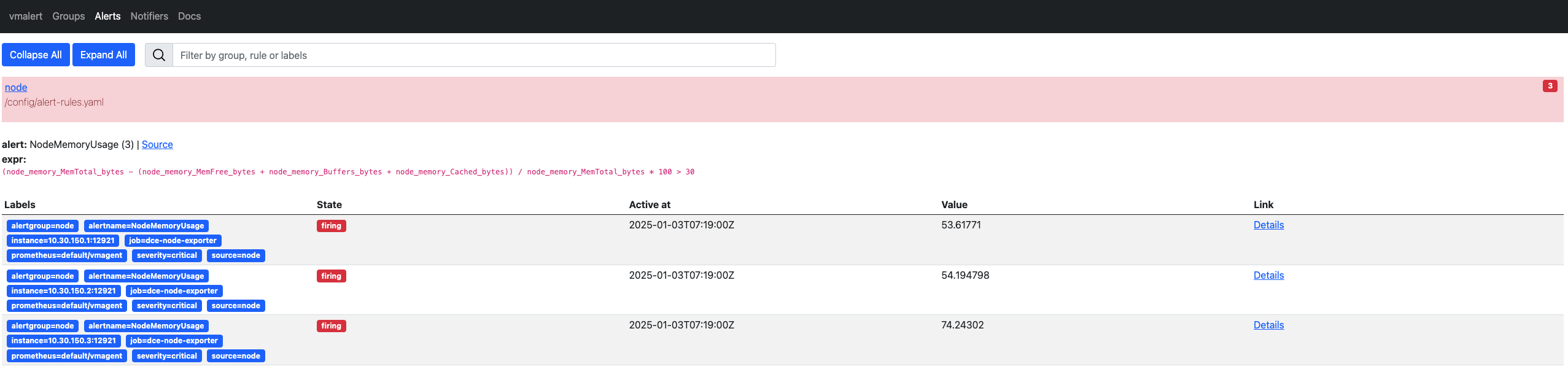

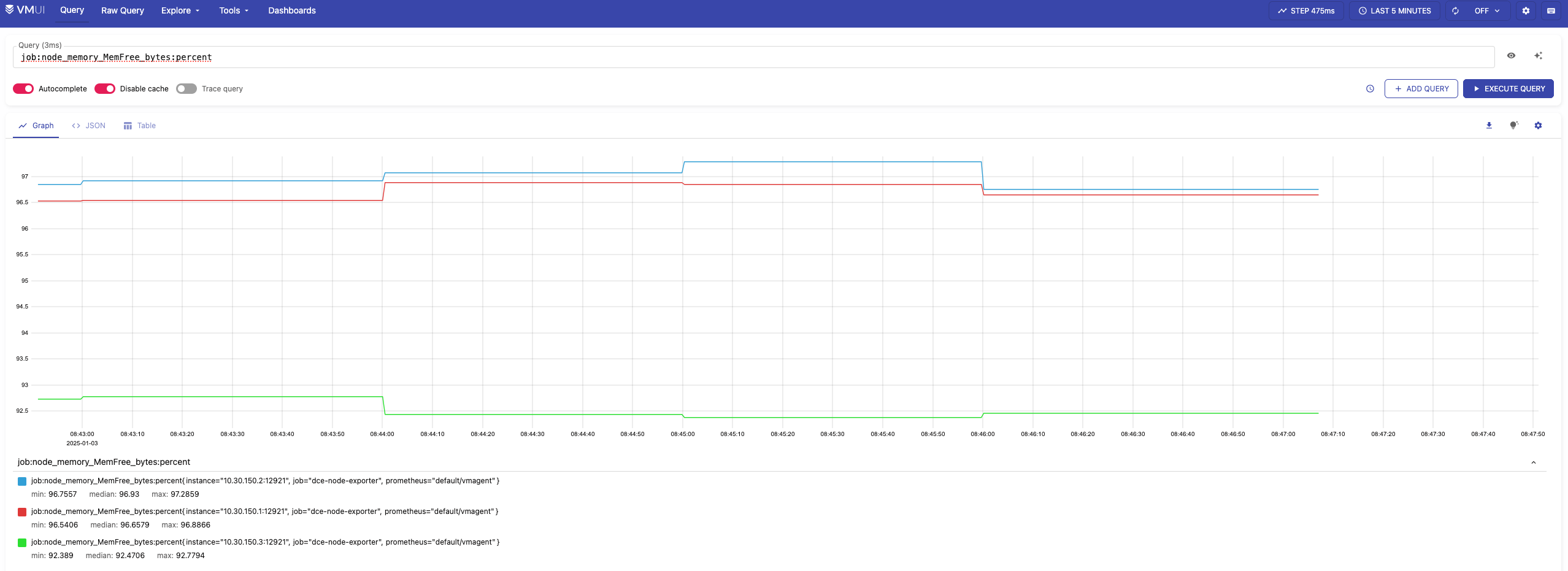

```yaml
groups:
  - name: record
    rules:
      - record: job:node_memory_MemFree_bytes:percent  # name of the recording rule
        expr: 100 - (100 * node_memory_MemFree_bytes / node_memory_MemTotal_bytes)
  - name: node
    rules:  # the alerting rules
      - alert: NodeMemoryUsage  # name of the alerting rule
        expr: (node_memory_MemTotal_bytes - (node_memory_MemFree_bytes + node_memory_Buffers_bytes + node_memory_Cached_bytes)) / node_memory_MemTotal_bytes * 100 > 30
        for: 1m
        labels:
          source: node
          severity: critical
        annotations:
          summary: "Node {{$labels.instance}} High Memory usage detected"
          description: "{{$labels.instance}}: Memory usage is above 30% (current value is: {{ $value }})"
```
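The two expressions above measure memory differently. The sketch below evaluates both for one node with made-up byte values, not real measurements. `alert_expr_percent` and `record_expr_percent` are hypothetical helpers that mirror the PromQL arithmetic for a single series.

```shell
# Hypothetical helpers reproducing the PromQL arithmetic above for one node.
alert_expr_percent() {
  # $1=MemTotal $2=MemFree $3=Buffers $4=Cached (bytes)
  # Buffers and cache count as available memory here
  awk -v t="$1" -v f="$2" -v b="$3" -v c="$4" \
    'BEGIN { printf "%.1f\n", (t - (f + b + c)) / t * 100 }'
}

record_expr_percent() {
  # $1=MemTotal $2=MemFree (bytes); buffers and cache count as "used" here
  awk -v t="$1" -v f="$2" 'BEGIN { printf "%.1f\n", 100 - (100 * f / t) }'
}

# Made-up node: 16 GB total, 4 GB free, 1 GB buffers, 3 GB cached
alert_value=$(alert_expr_percent 16000000000 4000000000 1000000000 3000000000)
record_value=$(record_expr_percent 16000000000 4000000000)
echo "alert expr: ${alert_value}%  record rule: ${record_value}%"

# NodeMemoryUsage fires once the value has stayed above 30 for 1m ("for: 1m")
if awk -v u="$alert_value" 'BEGIN { exit !(u + 0 > 30) }'; then
  echo "above the 30% threshold"
fi
```

For the same node the recording rule reports a higher percentage than the alert expression, because it does not treat buffers and cache as available memory.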

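Each rule is either a recording rule (record) or an alerting rule (alert), never both; here the recording rule is kept in its own group. Recording rules do not take the alert, for or annotations fields, and labels is optional for them.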

Check in the vmalert UI that the rules work:

```shell
kubectl port-forward svc/vma-victoria-metrics-alert-server 8880:8880
```

View all Groups.

The results of the recording rules we added are written to vminsert via remote write and persisted there, so they can also be queried through vmselect.

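Troubleshooting scrapes that hang because some metrics are too long: first check the logs of the vmstorage component (the vmstorage-vmcluster pods) to find out which metric is affected. The offending targets were then filtered in relabel_configs based on the following source label:

```yaml
source_labels: [__meta_kubernetes_pod_label_ruqi_app]
```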
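A complementary check, not the log-based approach described above, is to look for unusually long sample lines directly in a target's exposition output. The sketch assumes Prometheus text format on stdin. `longest_series` is a hypothetical helper, and the commented curl line uses placeholders.

```shell
# Hypothetical helper: read Prometheus text exposition format on stdin and
# print "<line length> <metric name>" for the N longest sample lines.
longest_series() {
  local n="${1:-10}"
  # Skip HELP/TYPE comments and blank lines; the metric name ends at '{' or ' '
  awk '!/^#/ && NF { name = $0; sub(/[{ ].*/, "", name); print length($0), name }' \
    | sort -rn | head -n "$n"
}

# Example (placeholders, not run here):
#   curl -s -u 'actuator:<password>' http://<pod-ip>:20001/actuator/prometheus | longest_series 5
```

The metric names at the top of this list are the natural candidates for a relabel rule that drops them or their targets.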