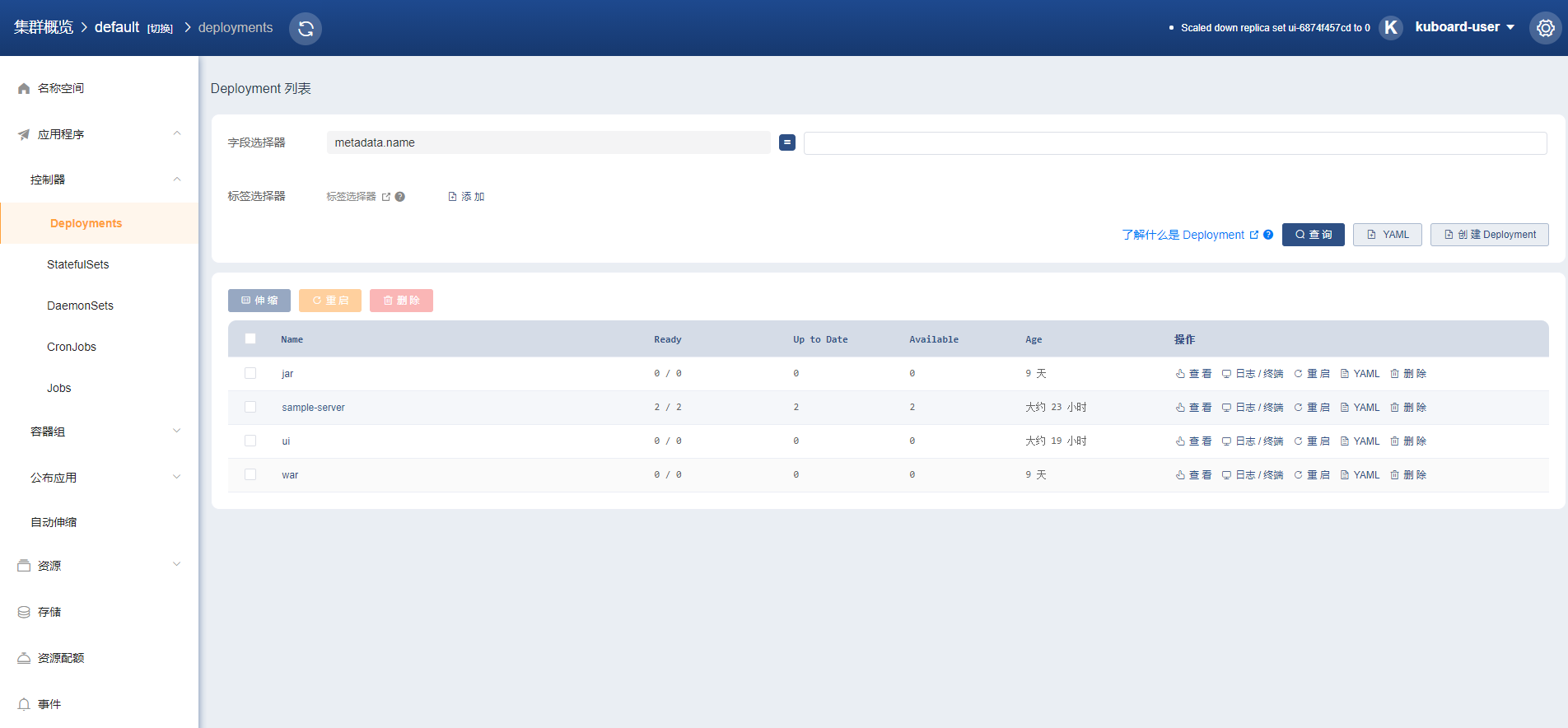

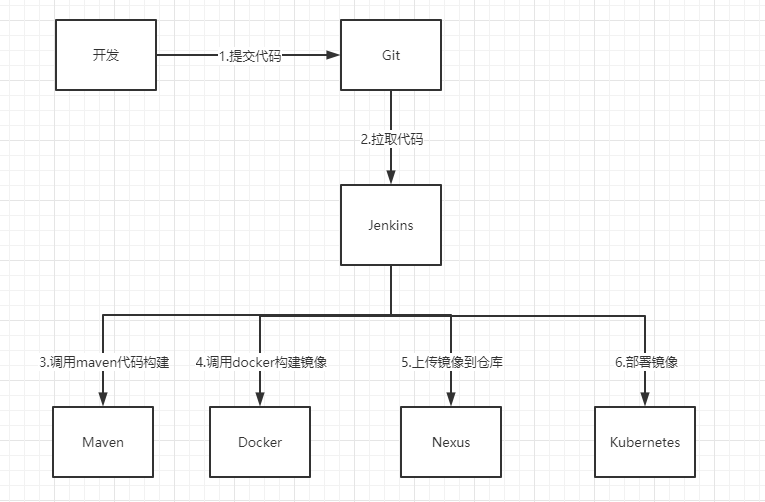

The figures above show the deployment workflow.

Kubernetes

This guide installs Kubernetes with a single master node.

Requirements

- At least two servers, each with 2 CPU cores and 4 GB of RAM
- CentOS 7.6 / 7.7 / 7.8

Check the CentOS version, hostname and CPU count:

```
[root@k8s-node1 ~]# cat /etc/redhat-release
CentOS Linux release 7.8.2003 (Core)
[root@k8s-node1 ~]# hostname
k8s-node1
[root@k8s-node1 ~]# lscpu
Architecture:          x86_64
CPU op-mode(s):        32-bit, 64-bit
Byte Order:            Little Endian
CPU(s):                2
```

Change the hostname

If you need to change the hostname, run:

```bash
hostnamectl set-hostname your-new-host-name
hostnamectl status
echo "127.0.0.1   $(hostname)" >> /etc/hosts
```

Check the network:

```
[root@k8s-node1 ~]# ip route show
default via 192.168.229.2 dev ens33 proto static metric 100
192.168.229.0/24 dev ens33 proto kernel scope link src 192.168.229.6 metric 100
[root@k8s-node1 ~]# ip address
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:67:6f:10 brd ff:ff:ff:ff:ff:ff
    inet 192.168.229.6/24 brd 192.168.229.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
```

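IP address used by kubelet

- `ip route show` reveals the machine's default network interface, usually `eth0` (for example `default via 172.21.0.23 dev eth0`). On this machine it is `ens33`.
- `ip address` shows the IP address of the default interface. Kubernetes uses this address to communicate with the other nodes in the cluster, here `192.168.229.6`.
- The IP addresses Kubernetes uses on all nodes must be able to reach each other directly: no NAT mapping, and no security-group or firewall isolation.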
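The same check can be written as two small shell functions. This is a sketch that assumes the `ip route show` and `ip -4 address show` output formats shown above. `default_iface` and `iface_ipv4` are hypothetical helper names and are not part of any install script.

```bash
#!/usr/bin/env bash
# Read `ip route show` output on stdin and print the interface that follows
# "dev" on the "default" route line.
default_iface() {
  awk '$1 == "default" { for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Read `ip -4 address show dev <iface>` output on stdin and print the first
# IPv4 address without its prefix length.
iface_ipv4() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }'
}

# Usage on a real node:
#   iface=$(ip route show | default_iface)
#   ip -4 address show dev "$iface" | iface_ipv4
```

On the node above, this prints `ens33` and `192.168.229.6`.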

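Install docker and kubelet

Confirm that all of the following hold:

- Every node runs CentOS 7.6, 7.7 or 7.8
- Every node has at least 2 CPU cores and at least 4 GB of memory
- No node's hostname is `localhost`, and no hostname contains underscores, dots or uppercase letters
- Every node has a fixed private IP address
- Every node has exactly one network interface. If you need more for a special purpose, add them after Kubernetes is installed
- The IP addresses kubelet uses on all nodes can reach each other without NAT, with no firewall or security-group isolation
- No node runs containers directly with `docker run` or `docker-compose`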
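You can check the hostname rules above before installing. The sketch below encodes only the rules in this list and does not perform full RFC 1123 validation. `check_hostname` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Check a hostname against the rules above: not "localhost", and no
# underscores, dots or uppercase letters. Prints "ok" or the first rule that
# fails, and returns non-zero on failure.
check_hostname() {
  local name=$1
  if [[ -z $name ]]; then
    echo "empty"; return 1
  elif [[ $name == localhost ]]; then
    echo "is localhost"; return 1
  elif [[ $name == *_* ]]; then
    echo "contains underscore"; return 1
  elif [[ $name == *.* ]]; then
    echo "contains dot"; return 1
  elif [[ $name =~ [[:upper:]] ]]; then
    echo "contains uppercase letter"; return 1
  fi
  echo "ok"
}

# Usage: check_hostname "$(hostname)"
```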

As root, run the following on all nodes to install:

- docker
- nfs-utils
- kubectl / kubeadm / kubelet

```bash
# registry mirror used when pulling images
export REGISTRY_MIRROR=https://registry.cn-hangzhou.aliyuncs.com
curl -sSL https://kuboard.cn/install-script/v1.19.x/install_kubelet.sh | sh -s 1.19.2
```

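Initialize the master node

```bash
# Run on the master node only; replace x.x.x.x with its private IP.
export MASTER_IP=x.x.x.x
export APISERVER_NAME=apiserver.demo
# Pod network CIDR; it must not overlap with the nodes' network.
export POD_SUBNET=10.100.0.1/16
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts
curl -sSL https://kuboard.cn/install-script/v1.19.x/init_master.sh | sh -s 1.19.2
```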
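POD_SUBNET must not overlap the network the nodes sit on. You can check this in pure bash before running the init script. This is a sketch that assumes IPv4 CIDRs in `a.b.c.d/n` form. `cidr_overlap` and its helpers are hypothetical names.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address (as integers) of a CIDR block such as 10.100.0.1/16.
cidr_range() {
  local ip=${1%/*} bits=${1#*/}
  local addr mask
  addr=$(ip_to_int "$ip")
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo "$(( addr & mask )) $(( (addr & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDR blocks share at least one address.
cidr_overlap() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<< "$(cidr_range "$1")"
  read -r b_lo b_hi <<< "$(cidr_range "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

# Usage:
#   cidr_overlap "$POD_SUBNET" 192.168.229.0/24 && echo "POD_SUBNET overlaps the node network"
```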

Check the master initialization result:

```
[root@k8s-node1 ~]# watch kubectl get pod -n kube-system -o wide
NAME                                       READY   STATUS    RESTARTS   AGE   IP              NODE
calico-kube-controllers-6c89d944d5-zwcl2   1/1     Running   3          43h   10.100.36.75    k8s-node1
calico-node-6gcj9                          1/1     Running   3          43h   192.168.229.6   k8s-node1
calico-node-pgqjz                          1/1     Running   5          42h   192.168.229.7   k8s-node2
coredns-59c898cd69-7vnfg                   1/1     Running   3          43h   10.100.36.76    k8s-node1
coredns-59c898cd69-fmrjf                   1/1     Running   3          43h   10.100.36.77    k8s-node1
etcd-k8s-node1                             1/1     Running   3          43h   192.168.229.6   k8s-node1
kube-apiserver-k8s-node1                   1/1     Running   3          43h   192.168.229.6   k8s-node1
kube-controller-manager-k8s-node1          1/1     Running   3          43h   192.168.229.6   k8s-node1
kube-proxy-6sfnc                           1/1     Running   5          42h   192.168.229.7   k8s-node2
kube-proxy-gpfrv                           1/1     Running   3          43h   192.168.229.6   k8s-node1
kube-scheduler-k8s-node1                   1/1     Running   3          43h   192.168.229.6   k8s-node1
kuboard-655785f55c-rc52g                   1/1     Running   1          23h   10.100.36.78    k8s-node1
metrics-server-7dbf6c4558-5ggbt            1/1     Running   3          21h   192.168.229.7   k8s-node2
[root@k8s-node1 ~]# kubectl get nodes -o wide
NAME        STATUS   ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION           CONTAINER-RUNTIME
k8s-node1   Ready    master   43h   v1.19.2   192.168.229.6   <none>        CentOS Linux 7 (Core)   3.10.0-1127.el7.x86_64   docker://19.3.11
```

Initialize the worker nodes

Get the join command parameters by running this on the master node:

```bash
kubeadm token create --print-join-command
```

This prints the `kubeadm join` command and its parameters, for example:

```
kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303
```

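Validity period

The token expires after a limited time. `kubeadm token create` uses a default TTL of 24 hours, which you can change with `--ttl` (for example `--ttl 2h`). Until the token expires, you can use it to join any number of worker nodes.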

Run on all worker nodes:

```bash
# replace x.x.x.x with the master's private IP
export MASTER_IP=x.x.x.x
export APISERVER_NAME=apiserver.demo
echo "${MASTER_IP}    ${APISERVER_NAME}" >> /etc/hosts
# use the join command printed on your own master
kubeadm join apiserver.demo:6443 --token mpfjma.4vjjg8flqihor4vt \
    --discovery-token-ca-cert-hash sha256:6f7a8e40a810323672de5eee6f4d19aa2dbdb38411845a1bf5dd63485c43d303
```
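When you copy the join command between terminals by hand, it is easy to truncate the token or the hash. The sketch below checks their format before you run `kubeadm join`. It assumes the documented bootstrap-token shape (6 and 16 lowercase alphanumerics joined by a dot) and a `sha256:` prefix followed by 64 hex digits. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Bootstrap token: 6 + 16 lowercase letters/digits, separated by a dot.
valid_token() {
  [[ $1 =~ ^[[:lower:][:digit:]]{6}\.[[:lower:][:digit:]]{16}$ ]]
}

# CA certificate hash: "sha256:" followed by 64 hex characters.
valid_ca_hash() {
  [[ $1 =~ ^sha256:[[:xdigit:]]{64}$ ]]
}

# Usage:
#   valid_token "$TOKEN" && valid_ca_hash "$CA_HASH" || echo "join parameters look truncated"
```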

Check the result:

```
[root@k8s-node1 ~]# kubectl get nodes -o wide
NAME        STATUS   ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION           CONTAINER-RUNTIME
k8s-node1   Ready    master   43h   v1.19.2   192.168.229.6   <none>        CentOS Linux 7 (Core)   3.10.0-1127.el7.x86_64   docker://19.3.11
k8s-node2   Ready    <none>   42h   v1.19.2   192.168.229.7   <none>        CentOS Linux 7 (Core)   3.10.0-1127.el7.x86_64   docker://19.3.11
[root@k8s-node1 ~]# kubectl get nodes
NAME        STATUS   ROLES    AGE   VERSION
k8s-node1   Ready    master   43h   v1.19.2
k8s-node2   Ready    <none>   42h   v1.19.2
```

Install the Ingress Controller

```bash
kubectl apply -f https://kuboard.cn/install-script/v1.19.x/nginx-ingress.yaml
```

Install Kuboard and metrics-server

```bash
kubectl apply -f https://kuboard.cn/install-script/kuboard.yaml
kubectl apply -f https://addons.kuboard.cn/metrics-server/0.3.7/metrics-server.yaml
```

Check Kuboard's status:

```
[root@k8s-node1 ~]# kubectl get pods -l k8s.kuboard.cn/name=kuboard -n kube-system
NAME                       READY   STATUS    RESTARTS   AGE
kuboard-655785f55c-rc52g   1/1     Running   1          23h
```

Get the login token:

```
[root@k8s-master ~]# echo $(kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d)
eyJhbGciOiJSUzI1NiIsImtpZCI6IjctcWttYVAycFljWlRoMUhtbE5lQzNueHV1dzhHX2xOUUNOQUpaSFI0TkUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tbTlycXMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiODdmMjBjOTQtZDZjZi00N2Y3LTg3ZjItNTg3NDc4OTY1ZDE0Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.mMD-OaqLoHrUeHFNNP2LyAS6d5yO1SbbkdPtxFCKFiGG7y5l6A6cuWzE0gqnrZXRXGMJ8nLJqNRmZDgdlyUnlBPtVe2ipWK3SYNy4kVRSguRqEXjhw7H6r1zDkyHRNczkuQQ6eYCAS55t-NRohBE6e4CAE_FiPnDF-AaO-0a0p2cZnqO1vgrkt3y_-9wfs2btmACkbzXJUyfECVWF2LYDsmeQvuiMMQkn_qcPRqh_Y65wBVfXv575NpyJox4KVEcPHcTUxgkMciIlgB6SjDCJI3PrX3GrXilqPx0tSILhvPo-cglwyK33Spvh7b0DWNi-oR3SL3aeME4Khz-ZLiLIQ
```
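The token printed above is a JWT, so you can decode its middle part to confirm which service account it belongs to. The sketch below assumes GNU `base64`. `jwt_payload` is a hypothetical helper; it only decodes the payload and does not verify the signature.

```bash
#!/usr/bin/env bash
# Print the decoded payload (second dot-separated part) of a JWT.
# JWTs use unpadded base64url, so map '_' and '-' back to '/' and '+'
# and restore the '=' padding before decoding.
jwt_payload() {
  local payload
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#payload} % 4 )) in
    2) payload+="==" ;;
    3) payload+="=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage: jwt_payload "$TOKEN"
```

For the Kuboard token, the payload should show the `kuboard-user` service account in the `kube-system` namespace.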

Log in to Kuboard with the token at `http://<IP of any worker node>:32567/`.

GitLab

Pull the GitLab image with Docker:

```
[root@public ~]# docker pull gitlab/gitlab-ce
Using default tag: latest
latest: Pulling from gitlab/gitlab-ce
001ecc9468da: Pull complete
f2b966749869: Pull complete
abe474042557: Pull complete
e1bf2fb0fbbc: Pull complete
01aa4f31067e: Pull complete
3e98644dd3ba: Pull complete
d1964c740a63: Pull complete
67d6f9c7950b: Pull complete
3a47828397ca: Pull complete
00ef60c779e0: Pull complete
Digest: sha256:81ff3b05d4da7a8c9b73ec0c4945319932f2ed1d89661831f5a9defe5e408125
Status: Downloaded newer image for gitlab/gitlab-ce:latest
docker.io/gitlab/gitlab-ce:latest
```

Check that the image was downloaded:

```
[root@public ~]# docker images
REPOSITORY                                TAG       IMAGE ID       CREATED         SIZE
gitlab/gitlab-ce                          latest    85ef0c92d667   3 days ago      1.98GB
registry.aliyuncs.com/k8sxio/kube-proxy   v1.19.2   d373dd5a8593   11 days ago     118MB
eipwork/kuboard                           latest    58cbda9ae6df   2 weeks ago     182MB
eipwork/metrics-server                    v0.3.7    07c9e703ca2c   5 months ago    55.4MB
calico/node                               v3.13.1   2e5029b93d4a   6 months ago    260MB
calico/pod2daemon-flexvol                 v3.13.1   e8c600448aae   6 months ago    111MB
calico/cni                                v3.13.1   6912ec2cfae6   6 months ago    207MB
registry.aliyuncs.com/k8sxio/pause        3.2       80d28bedfe5d   7 months ago    683kB
nginx/nginx-ingress                       1.5.5     1e674eebb1af   12 months ago   161MB
```

Create the data directories:

```
[root@public ~]# mkdir -p /file/gitlab/data
[root@public ~]# mkdir /file/gitlab/logs
[root@public ~]# mkdir /file/gitlab/config
```

Start GitLab:

```
[root@public gitlab]# docker run --detach \
    --hostname 192.168.229.8 \
    --publish 8443:443 --publish 9080:80 --publish 2222:22 \
    --name gitlab \
    --restart always \
    --volume /file/gitlab/config:/etc/gitlab \
    --volume /file/gitlab/logs:/var/log/gitlab \
    --volume /file/gitlab/data:/var/opt/gitlab \
    docker.io/gitlab/gitlab-ce:latest
ca10c6a70bfc8f55cd43af2471c1d670fc378726375ac4b39b6c9a761d2b5ed1
```

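If GitLab has a server to itself, the default configuration is fine. If it shares the server with other services, GitLab can use too much memory. In that case, reduce the number of worker processes:

```bash
vi /file/gitlab/config/gitlab.rb
# uncomment this line:
unicorn['worker_processes'] = 2
# then apply the configuration and restart
docker exec -it gitlab gitlab-ctl reconfigure
docker exec -it gitlab gitlab-ctl restart
```

Since GitLab 13.0, Puma has replaced Unicorn as the default web server. On current images, the equivalent setting is `puma['worker_processes']`.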
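To make the same change without opening vi (for example, from a provisioning script), a small awk-based helper can uncomment and set a key in gitlab.rb. This is a sketch that assumes the `key = value` line layout used in gitlab.rb. `set_gitlab_setting` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Set "<key> = <value>" in a gitlab.rb-style file. The first line that assigns
# <key>, whether commented out ("# key = ...") or not, is replaced; if no such
# line exists, the setting is appended at the end. Other lines are untouched.
set_gitlab_setting() {
  local file=$1 key=$2 value=$3
  awk -v key="$key" -v val="$value" '
    !done {
      line = $0
      sub(/^[ \t]*#[ \t]*/, "", line)   # look past a leading "# "
      if (index(line, key " =") == 1 || index(line, key "=") == 1) {
        print key " = " val
        done = 1
        next
      }
    }
    { print }
    END { if (!done) print key " = " val }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage, followed by the reconfigure/restart commands above:
#   set_gitlab_setting /file/gitlab/config/gitlab.rb "unicorn['worker_processes']" 2
```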

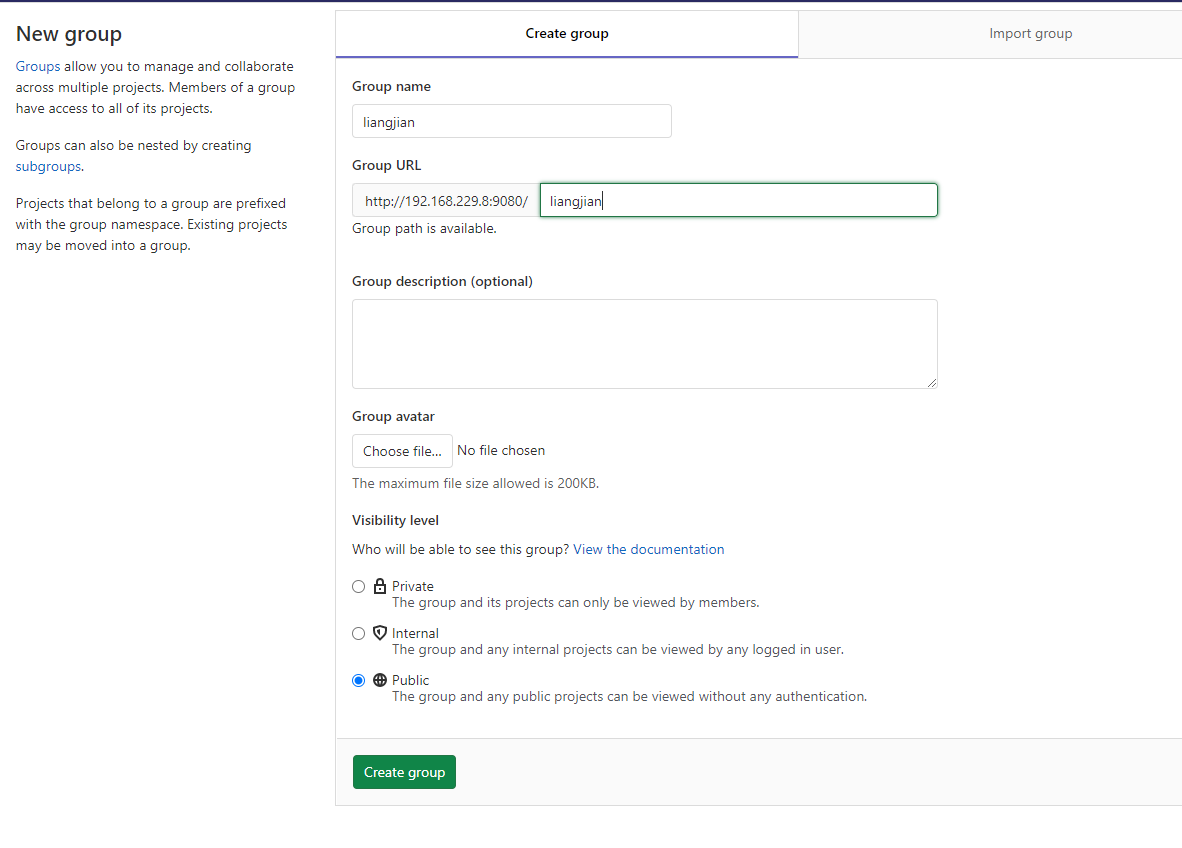

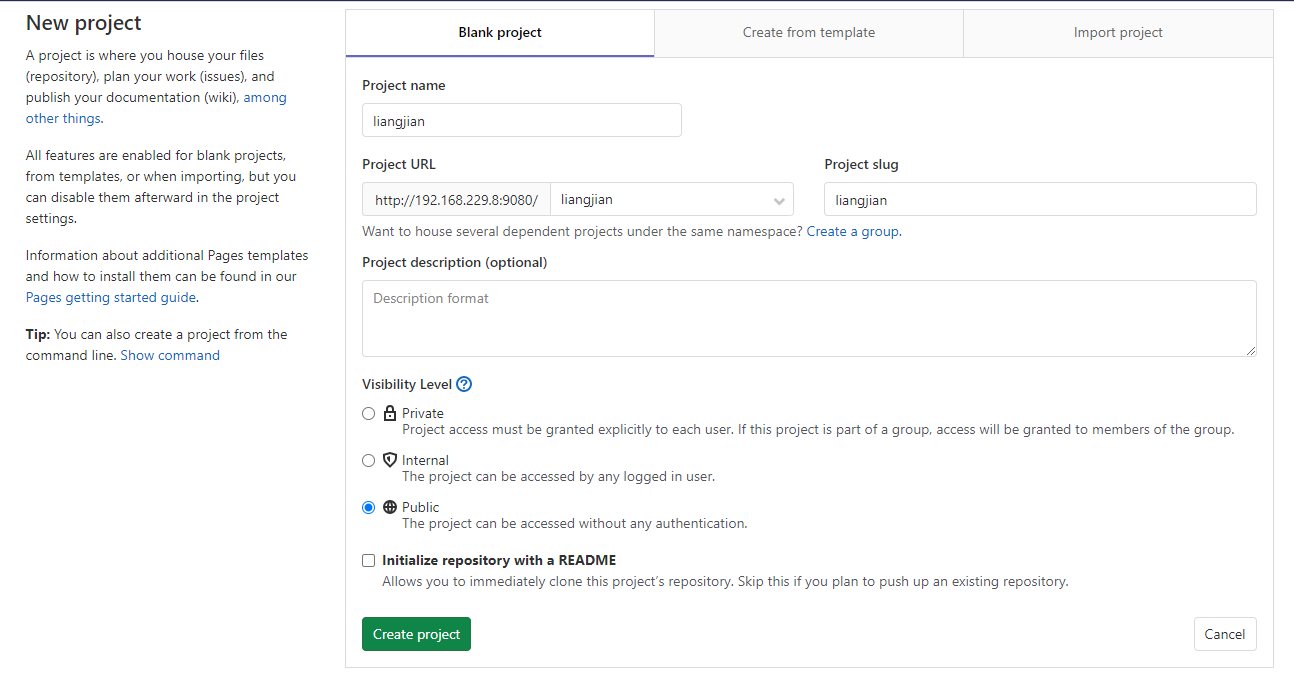

Create a group and a project, then configure the local Git environment:

```bash
git config --global user.name "Administrator"
git config --global user.email "admin@example.com"
```

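Jenkins

Install the JDK:

```bash
tar zxvf jdk-8u181-linux-x64.tar.gz
mv jdk1.8.0_181/ java
mv java /usr/local/
# append the following three lines to /etc/profile
vi /etc/profile
export JAVA_HOME=/usr/local/java
export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib/
export PATH=$PATH:$JAVA_HOME/bin
# apply the variables and check the version
source /etc/profile
java -version
```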

Install Jenkins

The default yum repositories do not include Jenkins, so first add the Jenkins repository:

```bash
sudo wget -O /etc/yum.repos.d/jenkins.repo https://pkg.jenkins.io/redhat-stable/jenkins.repo
sudo rpm --import https://pkg.jenkins.io/redhat-stable/jenkins.io.key
```

Then install Jenkins with yum:

```bash
sudo yum install -y jenkins
```

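Start Jenkins:

```bash
cd /usr/lib/jenkins/
java -Xms512m -Xmx1024m -jar jenkins.war &
```

When you start Jenkins this way as root, it uses `/root/.jenkins` as its home directory, and its configuration file is `/root/.jenkins/config.xml`.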

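Open `http://<ip>:8080` in a browser to reach the Jenkins login page.

The login page asks for the administrator password to unlock Jenkins. The password is stored in `secrets/initialAdminPassword` under the Jenkins home directory. That directory is `/var/lib/jenkins` when Jenkins runs as the yum-installed service, and `/root/.jenkins` when you start it with `java -jar` as root, as above.

```bash
cat /var/lib/jenkins/secrets/initialAdminPassword
# or, for the java -jar start above:
cat /root/.jenkins/secrets/initialAdminPassword
```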

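Copy the password, paste it into the unlock page, and click Continue. After a short wait, the plugin installation page appears.

Click Install suggested plugins to install the default plugins.

When the installation finishes, the page moves on to the admin account registration form.

Fill in the form and click Save and Finish.

Click Start using Jenkins to open the Jenkins home page.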

Install Git

```bash
yum install curl-devel expat-devel gettext-devel \
    openssl-devel zlib-devel
yum -y install git-core
# check the version
git --version
```

Install Maven

```bash
# download and extract Maven
wget https://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz
tar zxvf apache-maven-3.6.3-bin.tar.gz
mv apache-maven-3.6.3 /usr/local/maven
# add the environment variables to /etc/profile
vi /etc/profile
#MAVEN
MAVEN_HOME=/usr/local/maven
export MAVEN_HOME
export PATH=$PATH:$JAVA_HOME/bin:$MAVEN_HOME/bin
# apply them
source /etc/profile
# check the version
mvn -v
```

Install NPM

```
# download and extract Node.js
[root@public home]# wget https://nodejs.org/dist/v12.19.0/node-v12.19.0-linux-x64.tar.xz
[root@public home]# tar xf node-v12.19.0-linux-x64.tar.xz
[root@public home]# cd node-v12.19.0-linux-x64
# create symlinks
[root@public node-v12.19.0-linux-x64]# ln -s /home/node-v12.19.0-linux-x64/bin/npm /usr/local/bin/
[root@public node-v12.19.0-linux-x64]# ln -s /home/node-v12.19.0-linux-x64/bin/node /usr/local/bin/
# verify the versions
[root@public node-v12.19.0-linux-x64]# node -v
v12.19.0
[root@public node-v12.19.0-linux-x64]# npm -v
6.14.8
# install dependencies (npm install reads package.json in the current directory)
[root@public node-v12.19.0-linux-x64]# npm install
```

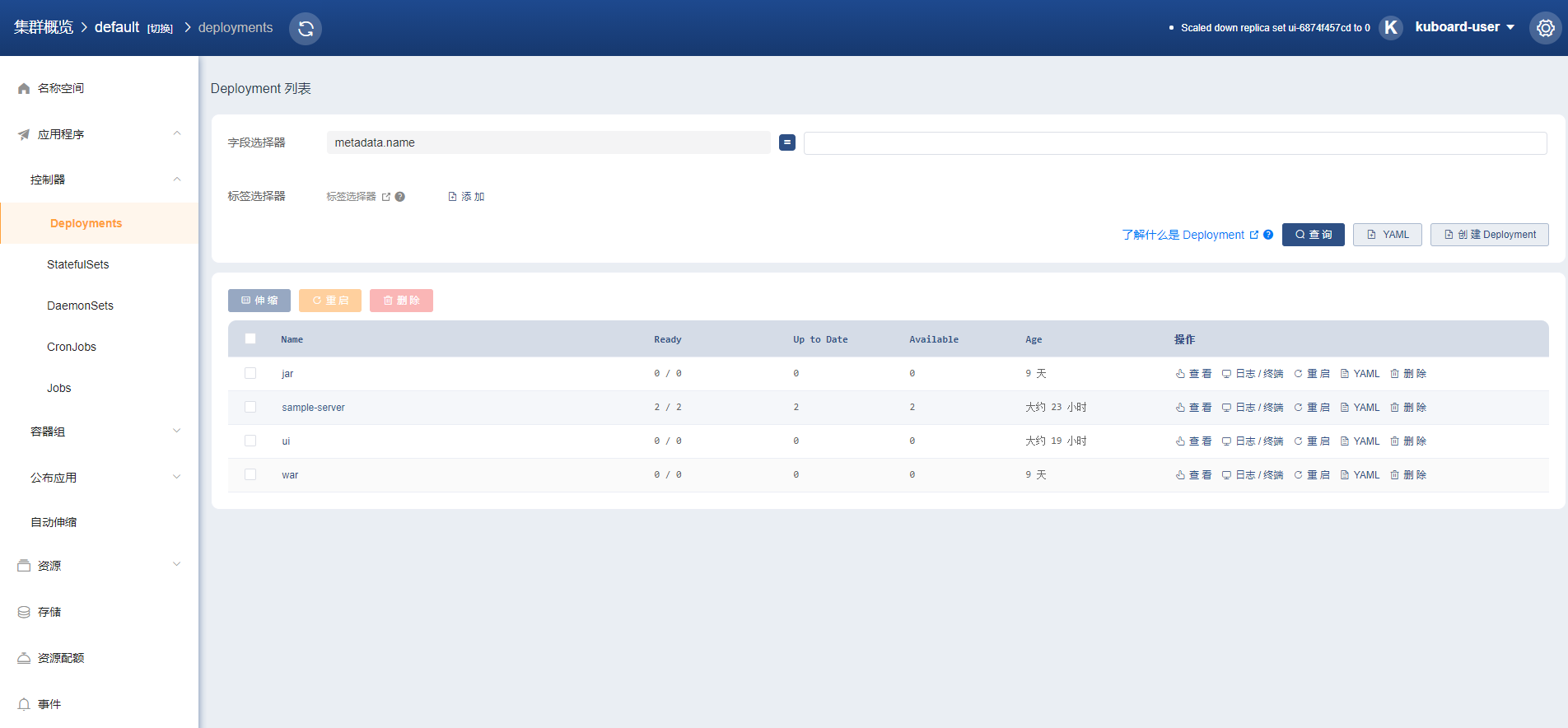

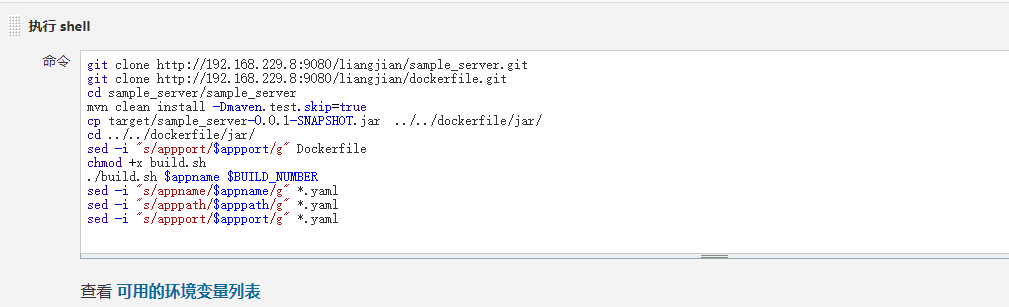

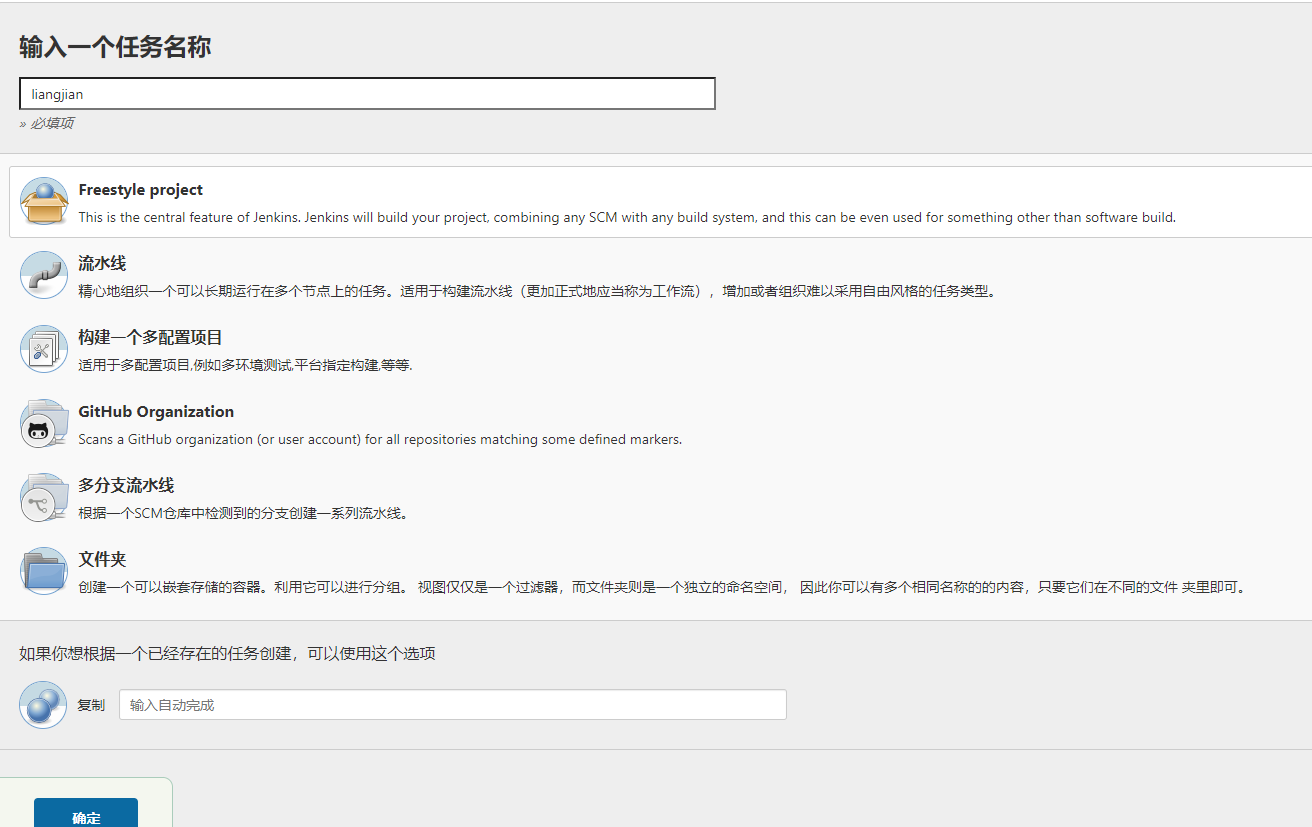

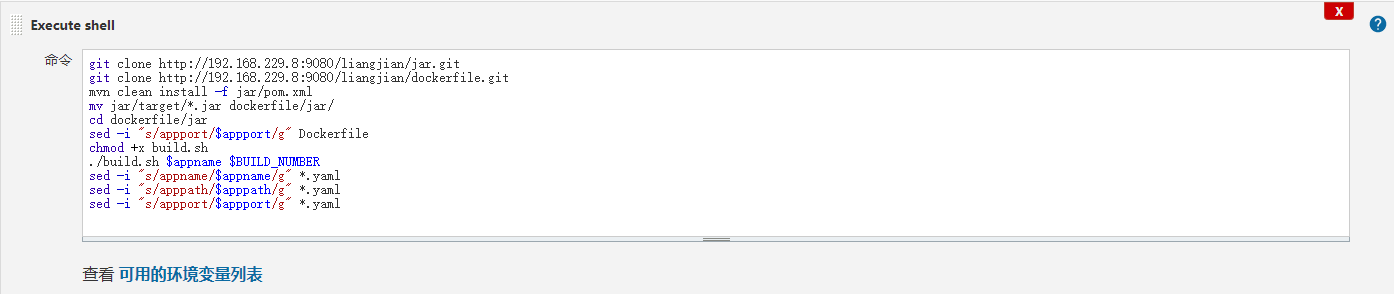

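Create a build job and configure its parameters and build steps. The job pulls the code with `git clone`, packages it with `mvn clean install`, and then builds the image from the Dockerfile and pushes it to the registry.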

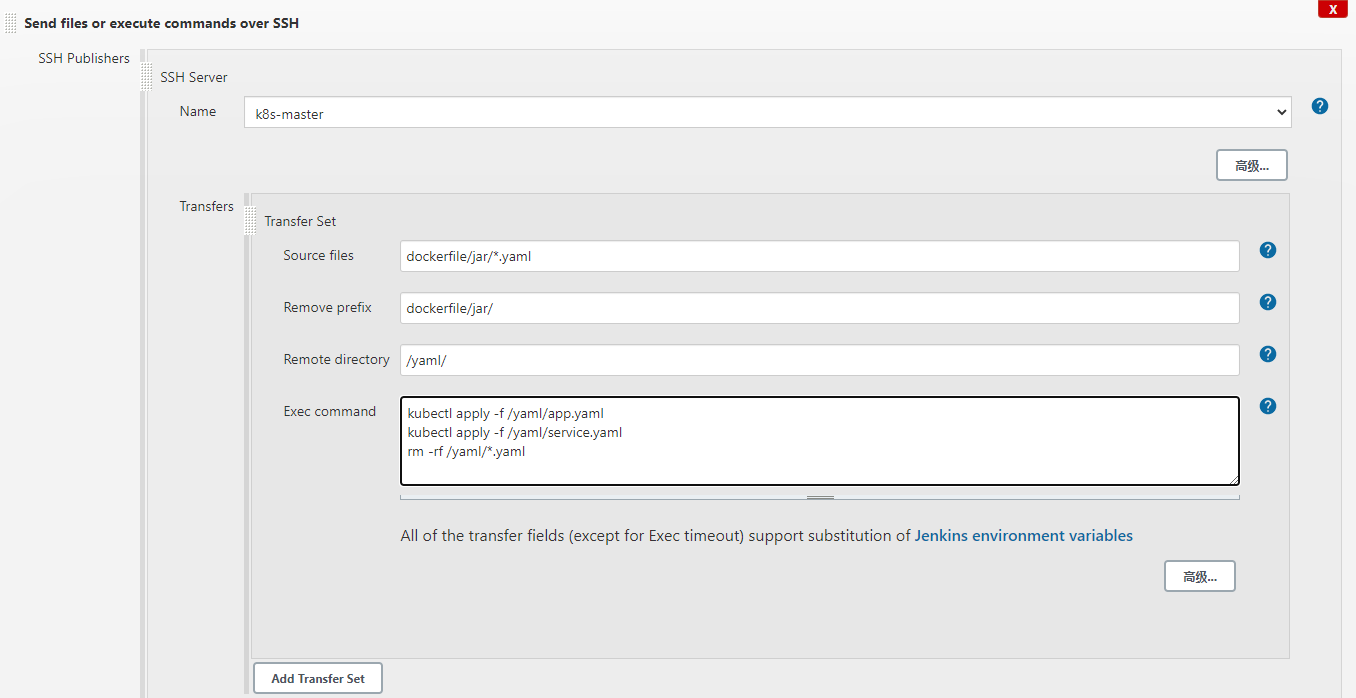

Deploy to Kubernetes

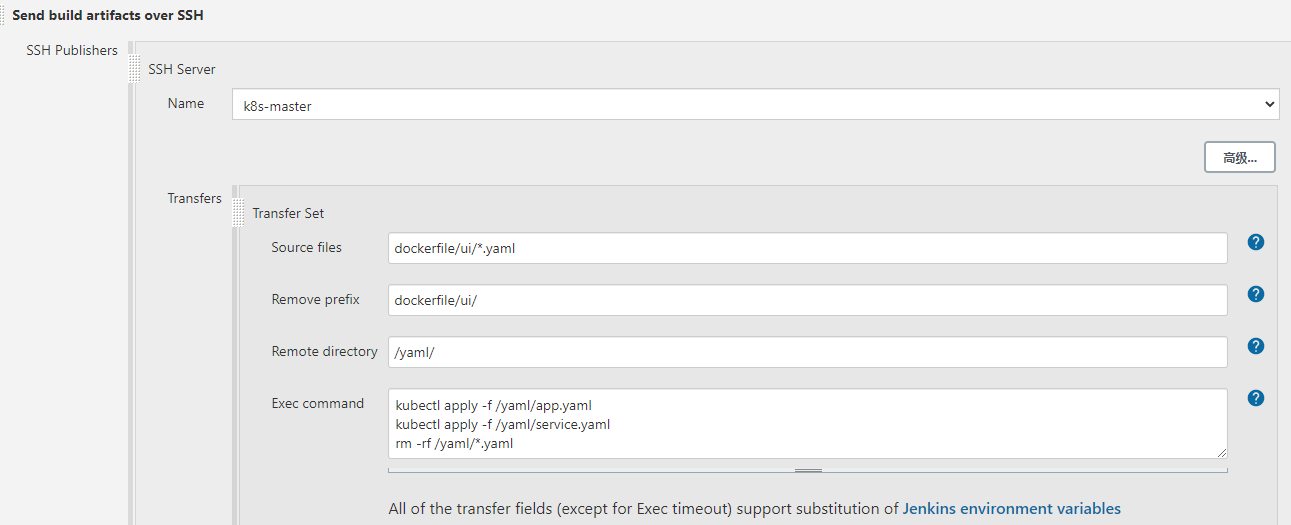

Send the YAML files to k8s-master with the SSH plugin.

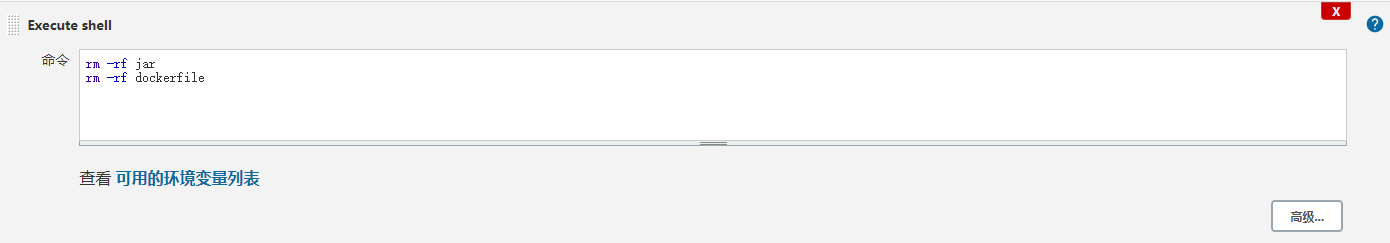

Delete the packaged files.

Files in the Dockerfile folder

Dockerfile

```dockerfile
FROM 192.168.229.8:8551/centos:latest
LABEL maintainer="nmk"
# the jar produced by mvn clean install, plus the startup script
COPY *.jar /home/App.jar
COPY start.sh /home/start.sh
RUN chmod +x /home/start.sh
ENV JAVA_HOME=/usr/local/java
ENV PATH=$JAVA_HOME/bin:$PATH
ENV CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
ENV LC_ALL="en_US.UTF-8"
ENV LANG="en_US.UTF-8"
# appport is a placeholder that the Jenkins job replaces with the real port
EXPOSE appport
CMD ["/home/start.sh"]
```

build.sh

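```bash
#!/bin/bash
# Usage: ./build.sh <appname> <build_number>
set -e
if [ $# -ne 2 ]; then
  echo "usage: $0 <appname> <build_number>" >&2
  exit 1
fi
# Build an image tagged with the build number so it can be rolled back
# (services are created from images)
docker build -t "192.168.229.8:8551/$1:$2" . -f Dockerfile
docker push "192.168.229.8:8551/$1:$2"
# Also tag the same image as latest, which the automatic deployment pulls
docker tag "192.168.229.8:8551/$1:$2" "192.168.229.8:8551/$1:latest"
docker push "192.168.229.8:8551/$1:latest"
```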

start.sh

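```bash
#!/bin/bash
JARFILE=/home/App.jar
LOGPATH=/home/logs
LOG=$LOGPATH/app.log
if [ ! -d "$LOGPATH" ]; then
  mkdir -p "$LOGPATH"
fi
# create the log file up front so tail does not race the JVM
touch "$LOG"
# run the application in the background, appending its output to the log
java -Xms512M -Xmx1024M -Djava.security.egd=file:/dev/./urandom -jar "$JARFILE" >> "$LOG" &
# keep the container in the foreground by following the log
tail -50f "$LOG"
```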

YAML files

app.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: appname
  name: appname
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: appname
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: appname
    spec:
      imagePullSecrets:
        - name: regcred
      containers:
        - name: appname
          image: 192.168.229.8:8551/appname:latest
          imagePullPolicy: Always
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: apppath
              port: appport
              scheme: HTTP
            initialDelaySeconds: 180
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: apppath
              port: appport
              scheme: HTTP
            initialDelaySeconds: 180
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources:
            limits:
              cpu: 200m
              memory: 512Mi
            requests:
              cpu: 100m
              memory: 256Mi
```

`appname`, `appport` and `apppath` are placeholders that the Jenkins job replaces. start.sh starts the JVM with `-Xmx1024M`, which is larger than the 512Mi memory limit here. If the process grows past the limit, the container is OOM-killed, so keep the two values consistent.

service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    app: appname
  name: appname
  namespace: default
spec:
  ports:
    - name: appname
      port: appport
      protocol: TCP
      targetPort: appport
  selector:
    app: appname
  sessionAffinity: None
  type: ClusterIP
```

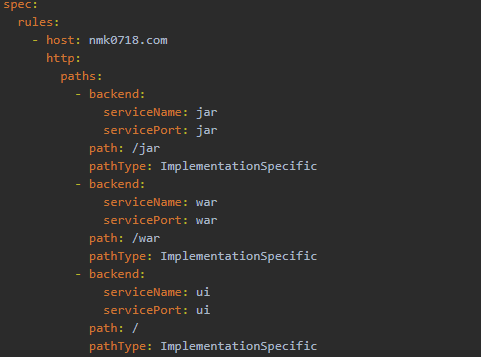

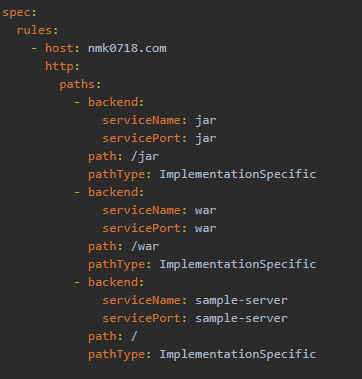

ingress.yaml

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  labels:
    app: appname
  name: appname
  namespace: default
spec:
  rules:
    - host: nmk0718.com
      http:
        paths:
          - backend:
              serviceName: appname
              servicePort: appname
            path: /apppath
            pathType: ImplementationSpecific
```

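Note: applying an ingress.yaml for the same host with a different path overwrites the existing rule, so you have to add extra paths under the same host manually. Ingresses for different hosts with the same path do not overwrite each other.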

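Packaging the front-end project

1. Pull the front-end code and the Dockerfile.
2. Extract the node_modules archive the front end depends on.
3. Build with `npm run build`.
4. Compress the generated `dist` folder.
5. Move the dist archive into the Dockerfile folder.
6. Make build.sh executable and run it with appname and build_number to build the image.
7. Replace the placeholders in the YAML files.
8. Send the YAML files to k8s-master with the SSH plugin.
9. Create the application and the Service it needs from the YAML files.
10. Delete the YAML files.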
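The "replace the placeholders" step can be a single sed pass over each template. The sketch below assumes the placeholder words `appname`, `appport` and `apppath` used in the Dockerfile and YAML files above. `render_template` and the example values are hypothetical, and values containing `|` or `&` would need escaping.

```bash
#!/usr/bin/env bash
# Read a template on stdin and replace the placeholder words appname,
# appport and apppath with real values. '|' is the sed delimiter because
# paths contain '/'.
render_template() {
  local name=$1 port=$2 path=$3
  sed -e "s|appname|${name}|g" \
      -e "s|appport|${port}|g" \
      -e "s|apppath|${path}|g"
}

# Usage (APP_NAME, APP_PORT and APP_PATH are hypothetical job parameters):
#   render_template "$APP_NAME" "$APP_PORT" "$APP_PATH" < app.yaml > app-rendered.yaml
```

The ingress template already writes `path: /apppath`, while the probes use `path: apppath`. A value with a leading slash therefore produces `//...` in the ingress, so pick one convention for both templates.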

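Packaging the back-end project

1. Pull the back-end code and the Dockerfile.
2. Enter the project directory and build the package.
3. Copy the jar into the Dockerfile folder.
4. Enter the Dockerfile folder and substitute the parameters into the Dockerfile and YAML files.
5. Make build.sh executable and run it with the parameters.
6. Send the YAML files to k8s-master with the SSH plugin.
7. Create the application and the Service it needs from the YAML files.
8. Delete the YAML files.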

Docker

Build a base image for the projects

Pull the CentOS image:

```
[root@public bin]# docker pull centos:7.8.2003
7.8.2003: Pulling from library/centos
9b4ebb48de8d: Already exists
Digest: sha256:8540a199ad51c6b7b51492fa9fee27549fd11b3bb913e888ab2ccf77cbb72cc1
Status: Downloaded newer image for centos:7.8.2003
docker.io/library/centos:7.8.2003
```

View the image:

```
[root@public bin]# docker images
REPOSITORY   TAG        IMAGE ID       CREATED        SIZE
centos       7.8.2003   afb6fca791e0   4 months ago   203MB
```

Run the image in the background (`tail -f /dev/null` keeps the container alive):

```bash
docker run -d afb6fca791e0 tail -f /dev/null
```

List the running containers:

```
[root@public bin]# docker ps
CONTAINER ID   IMAGE          COMMAND               CREATED         STATUS                  PORTS   NAMES
fc7ac56e28e4   afb6fca791e0   "tail -f /dev/null"   3 seconds ago   Up Less than a second           determined_nightingale
```

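Install the JDK in the container:

```bash
# copy the JDK archive into the container
docker cp jdk-8u181-linux-x64.tar.gz fc7ac56e28e4:/home/
# open a shell in the container
docker exec -it fc7ac56e28e4 /bin/bash
cd /home
tar zxvf jdk-8u181-linux-x64.tar.gz
# the archive extracts to jdk1.8.0_181
mv jdk1.8.0_181 java
mv java /usr/local/
# add the environment variables to /etc/profile
vi /etc/profile
export JAVA_HOME=/usr/local/java
export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib/
export PATH=$PATH:$JAVA_HOME/bin
# apply them
source /etc/profile
# check the version
java -version
```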

Commit the container as an image:

```
# turn the running container into an image
[root@public bin]# docker commit -a "nmk" fc7ac56e28e4 centos:v1.1
sha256:bd61002052705bc6b2c838ddae7fb2e1d4974c7ed32ab6dd0ed205096deba5d5
[root@public bin]# docker images
REPOSITORY   TAG        IMAGE ID       CREATED         SIZE
centos       v1.1       bd6100205270   3 seconds ago   203MB
centos       7.8.2003   afb6fca791e0   4 months ago    203MB
```

Nexus

Download Nexus from https://www.sonatype.com/download-oss-sonatype and choose Nexus Repository Manager OSS 3.x - Unix.

```bash
tar zxvf nexus-3.27.0-03-unix.tar.gz
mv nexus-3.27.0-03 /usr/local/nexus
mv sonatype-work/ /usr/local/
# add the environment variables to /etc/profile
vi /etc/profile
#nexus
export NEXUS_HOME=/usr/local/nexus
export PATH=$PATH:$NEXUS_HOME/bin
source /etc/profile
# web port (application-port, 8081 by default) and other settings
cd /usr/local/nexus/etc/
vi nexus-default.properties
cd /usr/local/nexus/bin
nexus start
```

Then open http://192.168.229.8:8081/.

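Create Docker repositories

Nexus offers three kinds of Docker repository:

- hosted: a private repository that supports both push and pull
- proxy: proxies and caches a remote repository; pull only
- group: combines several proxy and hosted repositories behind one address, so clients only need that address; pull only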

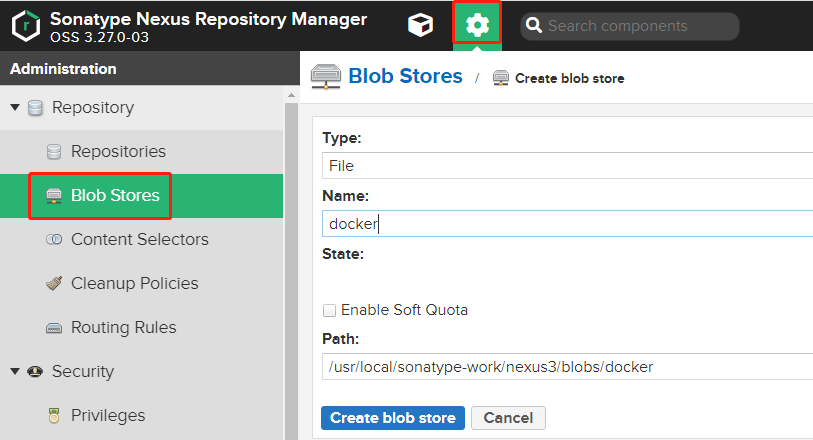

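1. Configure a blob store

Click Administration -> Repository -> Blob Stores -> Create blob store.

Once you create a blob store, you cannot change its type or name. Once a repository or repository group uses it, you cannot delete it. A repository can use only one blob store, but one blob store can serve several repositories. A blob store's size is the size of the folder at its Path.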

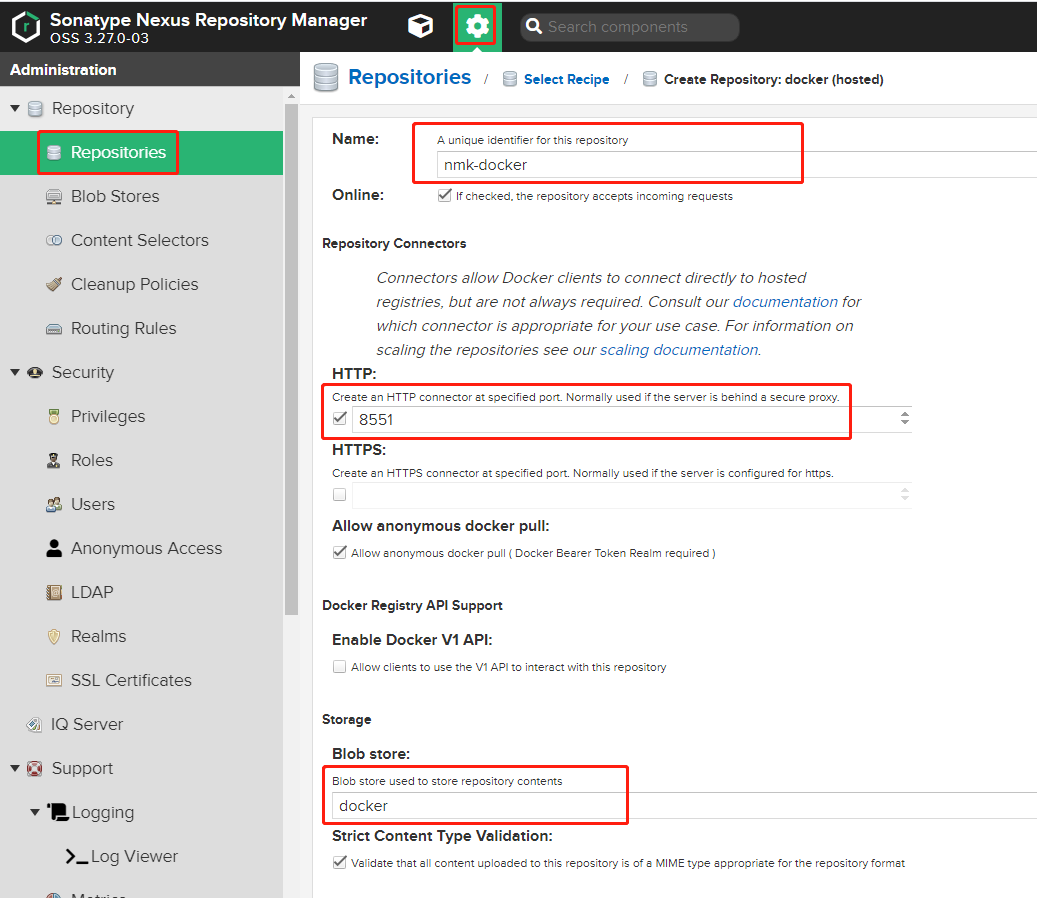

2. Create a hosted repository

Click Administration -> Repository -> Repositories -> Create repository -> docker (hosted).

This creates a private registry reachable at 192.168.229.8:8551, the HTTP port configured on the repository.

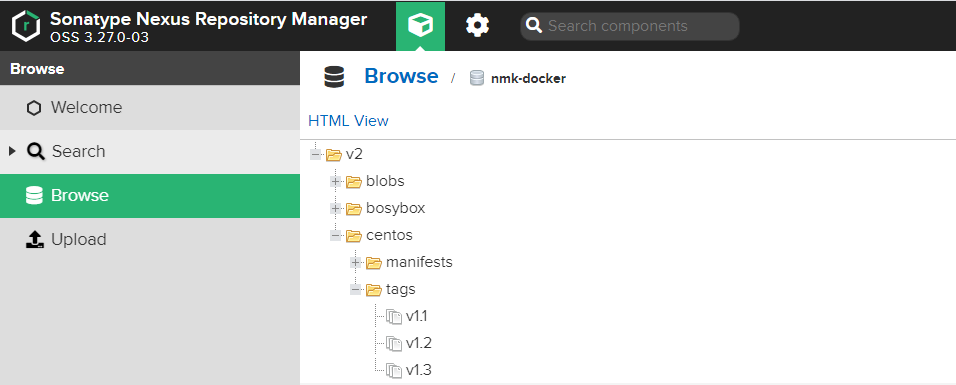

List the repositories in the private registry:

```
[root@public ~]# curl -X GET -u admin:nmk0718 http://192.168.229.8:8551/v2/_catalog
{"repositories":["bosybox","centos","nmk0718","nmktest"]}
```

List the tags of an image in the private registry:

```
[root@public ~]# curl -X GET -u admin:nmk0718 http://192.168.229.8:8551/v2/centos/tags/list
{"name":"centos","tags":["v1.1","v1.2","v1.3"]}
```

Modify the Docker configuration

Add `"insecure-registries": ["<registry address>"]` to `/etc/docker/daemon.json` (shown here on k8s-node2):

```json
{
  "registry-mirrors": ["https://registry.cn-hangzhou.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "insecure-registries": [
    "192.168.229.8:8551"
  ]
}
```

Then restart Docker:

```bash
service docker restart
```

Tag the image:

```bash
# syntax: docker tag <imageId or imageName> <nexus-hostname>:<repository-port>/<image>:<tag>
docker tag centos:v1.1 192.168.229.8:8551/centos:v1.1
```

Log in to the private registry:

```bash
docker login -u admin -p nmk0718 192.168.229.8:8551
```

View the stored login credentials:

```
[root@k8s-node2 ~]# cat ~/.docker/config.json
{
  "auths": {
    "192.168.229.8:8551": {
      "auth": "YWRtaW46bm1rMDcxOA=="
    }
  },
  "HttpHeaders": {
    "User-Agent": "Docker-Client/19.03.11 (linux)"
  }
}
```

You can decode the username and password to plain text:

```
[root@k8s-node2 ~]# echo 'YWRtaW46bm1rMDcxOA==' | base64 --decode
admin:nmk0718
```

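To be improved:

Docker stores the registry username and password in its configuration file essentially in plain text (only base64-encoded), which is insecure. Unless you run `docker logout` manually after each interaction with the registry, the password is easy for others to steal. Docker addresses this with platform-specific credential helpers that keep login credentials in a more secure store.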

Push the image to the private registry:

```bash
docker push 192.168.229.8:8551/centos:v1.1
```

View the uploaded image.

Pull the image back from the private registry:

```
[root@public bin]# docker pull 192.168.229.8:8551/centos:v1.1
v1.1: Pulling from centos
9b4ebb48de8d: Already exists
72bf021082cd: Pull complete
7f669da652be: Pull complete
b64a50fe3bd5: Pull complete
3e3a9f68a07c: Pull complete
Digest: sha256:7c78a495de5556ab53b14c017bc20c1c4ce682d1e9d4c6aefde2c908d65ef80c
Status: Downloaded newer image for 192.168.229.8:8551/centos:v1.1
192.168.229.8:8551/centos:v1.1
```

View the pulled image:

```
[root@public bin]# docker images
REPOSITORY                  TAG        IMAGE ID       CREATED        SIZE
192.168.229.8:8551/centos   v1.1       3a26ef36258c   18 hours ago   1.03GB
centos                      7.8.2003   afb6fca791e0   4 months ago   203MB
```

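Pulling images from a private registry in Kubernetes

Create the secret

To pull images from a private registry, create a registry secret for it and reference that secret when you create containers. The syntax is:

```bash
kubectl create secret docker-registry <regsecret-name> --docker-server=<your-registry-server> --docker-username=<your-name> --docker-password=<your-pword> --docker-email=<your-email>
```

- `<regsecret-name>`: the name of the registry secret to create
- `<your-registry-server>`: the registry server address
- `<your-name>`: the username for logging in to the registry
- `<your-pword>`: the password for logging in to the registry
- `<your-email>`: the user's email address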

```bash
kubectl create secret docker-registry regcred --docker-server=192.168.229.8:8551 --docker-username=admin --docker-password=nmk0718 --docker-email=nmk0718@163.com
```

Inspect the Secret `regcred`:

```
[root@k8s-node1 ~]# kubectl get secret regcred --output=yaml
apiVersion: v1
data:
  .dockerconfigjson: eyJhdXRocyI6eyIxOTIuMTY4LjIyOS44Ojg1NTEiOnsidXNlcm5hbWUiOiJhZG1pbiIsInBhc3N3b3JkIjoibm1rMDcxOCIsImVtYWlsIjoibm1rMDcxOEAxNjMuY29tIiwiYXV0aCI6IllXUnRhVzQ2Ym0xck1EY3hPQT09In19fQ==
kind: Secret
metadata:
  creationTimestamp: "2020-09-22T10:38:20Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:data:
        .: {}
        f:.dockerconfigjson: {}
      f:type: {}
    manager: kubectl-create
    operation: Update
    time: "2020-09-22T10:38:20Z"
  name: regcred
  namespace: default
  resourceVersion: "98093"
  selfLink: /api/v1/namespaces/default/secrets/regcred
  uid: 4bd46b7a-9f5c-4a73-9651-c1d947bba264
type: kubernetes.io/dockerconfigjson
```

The value of the `.dockerconfigjson` field is a base64 representation of the Docker credentials.

To see what the `.dockerconfigjson` field contains, convert the Secret data to a readable format:

```
[root@k8s-node1 ~]# kubectl get secret regcred --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode
{"auths":{"192.168.229.8:8551":{"username":"admin","password":"nmk0718","email":"nmk0718@163.com","auth":"YWRtaW46bm1rMDcxOA=="}}}
```

To see what the `auth` field contains, convert the base64-encoded data to a readable format:

```
[root@k8s-node1 ~]# echo "YWRtaW46bm1rMDcxOA==" | base64 --decode
admin:nmk0718
```

Note that the Secret data contains an authorization token similar to the one in your local `~/.docker/config.json`.

You have now stored your Docker credentials in the cluster as a Secret named `regcred`.

Define a Pod that pulls from the private registry

Create a Pod that uses your Secret:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: private-reg
spec:
  containers:
    - name: private-reg-container
      image: <your-private-image>
  imagePullSecrets:
    - name: regcred
```

In `my-private-reg-pod.yaml`, replace `<your-private-image>` with the path of an image in the private registry, for example:

```
192.168.229.8:8551/centos:v1.1
```

Kubernetes needs credentials to pull from a private registry. The `imagePullSecrets` field in the configuration file tells Kubernetes to get them from the Secret named `regcred`.

Create the Pod that uses your Secret and check that it is running:

```
kubectl apply -f my-private-reg-pod.yaml
[root@k8s-node1 ~]# kubectl get pod private-reg
NAME          READY   STATUS    RESTARTS   AGE
private-reg   1/1     Running   1          20h
```

Useful kubectl commands

```bash
# 1. View a Pod's logs
kubectl logs <pod_name>
kubectl logs -f <pod_name>             # follow the log, like tail -f

# 2. View the logs of a specific container in a Pod
kubectl logs <pod_name> -c <container_name>

# 3. Open a shell in a Pod's container
kubectl exec -ti <your-pod-name> -n <your-namespace> -- /bin/sh
kubectl exec -it private-reg -n default -- /bin/bash

# 4. Show more information about a Pod
kubectl get pod <pod-name> -o wide
kubectl get pod <pod-name> -o yaml     # full Pod definition as YAML

# 5. List and describe resources
kubectl get pods                       # all Pods
kubectl get rc,service                 # ReplicationControllers and Services
kubectl describe ${type} ${name}       # detailed description of a resource
kubectl describe pod private-reg
kubectl get <nodes|namespaces|services|pods|rc|deployments|replicasets> -o wide
kubectl get nodes -o wide
```

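Scale an application

1) Scale out

To scale the Deployment to 4 Pods, use `kubectl scale deployment`:

```bash
$ kubectl scale deployments my-nexus3 --replicas=4
```

2) Check the Pods after scaling

`kubectl get pods` shows the number of Pods after scaling:

```bash
$ kubectl get pods -o wide
```

Upgrade an application

1) Update the application image with `kubectl set image`

When a new version of the application is released, update the Deployment's image:

```bash
# '*' updates every container in the Pod template; quote it so the shell does not expand it
$ kubectl set image deployments/my-nexus3 '*=sonatype/nexus3:latest'
```

2) Roll back to the previous version

If the deployed version has problems, roll back with `kubectl rollout undo`:

```bash
$ kubectl rollout undo deployments/my-nexus3
```

3) Check the rollout status

`kubectl rollout status` shows the status of an upgrade or rollback:

```bash
$ kubectl rollout status deployments/my-nexus3
```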

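Periodically remove untagged images on the worker nodes with a script:

```bash
docker rmi $(docker images | grep "<none>" | awk '{print $3}')
```

`docker image prune -f` also removes dangling images.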
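A slightly more precise version of the cleanup pipeline matches the TAG column exactly instead of grepping the whole line. The sketch below assumes the default `docker images` column layout. `dangling_image_ids` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Read `docker images` output on stdin, skip the header, and print the
# IMAGE ID of every image whose TAG column is exactly "<none>".
dangling_image_ids() {
  awk 'NR > 1 && $2 == "<none>" { print $3 }'
}

# Usage on a worker node (for example, from cron):
#   ids=$(docker images | dangling_image_ids)
#   [ -n "$ids" ] && docker rmi $ids
```

The `[ -n "$ids" ]` guard in the usage lines keeps `docker rmi` from failing with no arguments when there is nothing to delete.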

`docker login` fails with:

```
WARNING! Using --password via the CLI is insecure. Use --password-stdin.
https://192.168.229.8:8551/v2/: http: server gave HTTP response to HTTPS client
```

The warning only means the password was passed with `-p`. The actual error comes from the registry speaking plain HTTP. Add the registry address to `insecure-registries` in `/etc/docker/daemon.json` and restart Docker:

```json
{
  "registry-mirrors": ["https://registry.cn-hangzhou.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "insecure-registries": [
    "192.168.229.8:8551"
  ]
}
```

```bash
[root@k8s-node2 ~]# systemctl restart docker
```

```
Error response from daemon: login attempt to http://xx.xx.xx.xx:2020/v2/ failed with status: 401 Unauthorized
```

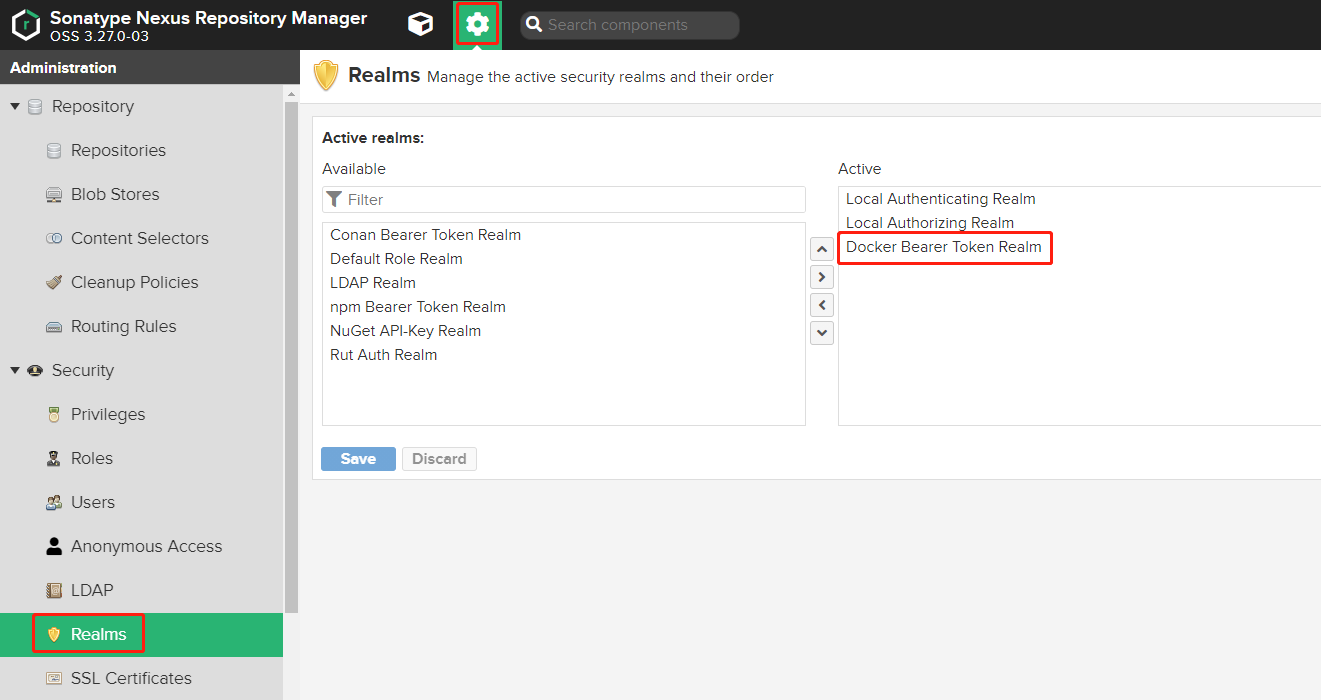

To fix this, in Nexus go to Administration -> Security -> Realms and move Docker Bearer Token Realm to the Active list.

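Why a Pod ends up in ImagePullBackOff

The Pod's status shows ErrImagePull or ImagePullBackOff:

```bash
[root@k8s-node1 ~]# kubectl get pods
```

Use describe to see the details of the failing Pod:

```bash
kubectl describe pod <pod-name>
```

The Events section of the describe output contains:

```
https://192.168.229.8:8551/v2/: http: server gave HTTP response to HTTPS client
```

The cause is that the image is pulled over HTTPS while the registry only speaks HTTP. Add the private registry to `/etc/docker/daemon.json` on the node, as shown above, and restart Docker.

Note: the Pod status alone cannot tell a missing image apart from incorrect registry permissions, because both surface as ErrImagePull / ImagePullBackOff. Read the Events to find the actual error.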
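The two registry errors covered in this section can be told apart by their messages. The sketch below maps an Events line or a `docker login` message to the corresponding fix. `diagnose_pull_error` is a hypothetical helper, and it only recognizes the two messages quoted here.

```bash
#!/usr/bin/env bash
# Map an image-pull or docker-login error message to the fix described above.
diagnose_pull_error() {
  case $1 in
    *"server gave HTTP response to HTTPS client"*)
      echo "add the registry to insecure-registries in /etc/docker/daemon.json and restart docker" ;;
    *"401 Unauthorized"*)
      echo "check the credentials and activate Docker Bearer Token Realm in Nexus" ;;
    *)
      echo "unknown: inspect the full Events output" ;;
  esac
}

# Usage: diagnose_pull_error "$(kubectl describe pod <pod-name> | grep -i failed)"
```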

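Exposing Kubernetes services on the host

NodePort

Specify `nodePort` in the Service's YAML definition:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: my-service
spec:
  type: NodePort          # Service type
  selector:
    app: forme
  ports:
    - port: 8088          # port that other services in the cluster use
      targetPort: 8088    # port exposed by the container in the backend Pod
      nodePort: 8088      # port exposed on every node
```

nodePort 8088 lies outside the default NodePort range (30000-32767). The API server only accepts it after you change the range, as described below.

Verify:

http://192.168.50.56:8088/health (host IP + nodePort)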

Change the NodePort range

In a Kubernetes cluster, the default NodePort range is 30000-32767. To change it, edit the kube-apiserver static Pod manifest on the master:

```
[root@k8s-master ~]# vi /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.50.53:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.50.53
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --insecure-port=0
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-cluster-ip-range=10.96.0.0/16
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: registry.aliyuncs.com/k8sxio/kube-apiserver:v1.19.2
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.50.53
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-apiserver
    readinessProbe:
      failureThreshold: 3
      httpGet:
        host: 192.168.50.53
        path: /readyz
        port: 6443
        scheme: HTTPS
      periodSeconds: 1
      timeoutSeconds: 15
    resources:
      requests:
        cpu: 250m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 192.168.50.53
        path: /livez
        port: 6443
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/ssl/certs
      name: ca-certs
      readOnly: true
    - mountPath: /etc/pki
      name: etc-pki
      readOnly: true
    - mountPath: /etc/kubernetes/pki
      name: k8s-certs
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  volumes:
  - hostPath:
      path: /etc/ssl/certs
      type: DirectoryOrCreate
    name: ca-certs
  - hostPath:
      path: /etc/pki
      type: DirectoryOrCreate
    name: etc-pki
  - hostPath:
      path: /etc/kubernetes/pki
      type: DirectoryOrCreate
    name: k8s-certs
status: {}
```

Below `- --service-cluster-ip-range=10.96.0.0/16`, add:

```yaml
    - --service-node-port-range=7000-10000
```
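Before you pick a nodePort, you can check it against the configured range. The sketch below assumes the range is written as `LOW-HIGH`, as in `--service-node-port-range`. `in_nodeport_range` is a hypothetical name, and it defaults to 30000-32767.

```bash
#!/usr/bin/env bash
# Succeed if a port lies inside a NodePort range given as "LOW-HIGH"
# (defaults to 30000-32767 when no range is passed).
in_nodeport_range() {
  local port=$1 range=${2:-30000-32767}
  local low=${range%-*} high=${range#*-}
  (( port >= low && port <= high ))
}

# Usage:
#   in_nodeport_range 8088 7000-10000 && echo "8088 is allowed"
```

For example, `in_nodeport_range 8088` fails under the default range but succeeds with `7000-10000`. Kuboard's 32567 passes the default range but falls outside `7000-10000`.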

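Restart the API server:

```bash
# get the name of the apiserver Pod
export apiserver_pods=$(kubectl get pods --selector=component=kube-apiserver -n kube-system --output=jsonpath={.items..metadata.name})
# delete the apiserver Pod
kubectl delete pod $apiserver_pods -n kube-system
```

kubelet watches `/etc/kubernetes/manifests`, so it normally recreates the static kube-apiserver Pod on its own once you save the edited manifest.

Verify that the change took effect:

```bash
kubectl describe pod $apiserver_pods -n kube-system | grep service-node-port-range
```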

HostNetwork

Modify app.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: ymall
  name: ymall
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ymall
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: ymall
    spec:
      hostNetwork: true
      imagePullSecrets:
        - name: regcred
      containers:
        - name: ymall
          image: 192.168.50.52:8551/ymall:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
          resources:
            limits:
              cpu: 800m
              memory: 1224Mi
            requests:
              cpu: 100m
              memory: 512Mi
```

The key settings are `hostNetwork: true` and `containerPort: 8080`.

In host network mode, the container uses the host's IP address directly, so you do not need a service.yaml. Because the container binds port 8080 directly on the node, only one such Pod can run per node.

Apply the file to get a container with the host's IP:

```
kubectl apply -f app.yaml
[root@k8s-master ~]# kubectl get po --all-namespaces -o wide --show-labels | grep ymall
default   ymall-754946fd54-dwwjp   1/1   Running   0   37s   192.168.50.54   k8s-work1   <none>   <none>   app=ymall,pod-template-hash=754946fd54
```

The Pod IP is now the host's IP.

Mount a host directory into the container

Modify the YAML:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: appname
  name: appname
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: appname
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: appname
    spec:
      hostNetwork: true
      imagePullSecrets:
        - name: regcred
      containers:
        - name: appname
          image: 192.168.50.52:8551/appname:latest
          imagePullPolicy: Always
          volumeMounts:
            - name: appname
              mountPath: /home/www/appCenterTest/public
          ports:
            - containerPort: 8081
          resources:
            limits:
              cpu: 800m
              memory: 1224Mi
            requests:
              cpu: 100m
              memory: 512Mi
      volumes:
        - name: appname
          hostPath:
            path: /data
            type: Directory
```

With `type: Directory`, the path `/data` must already exist on the node; otherwise the Pod fails to start.

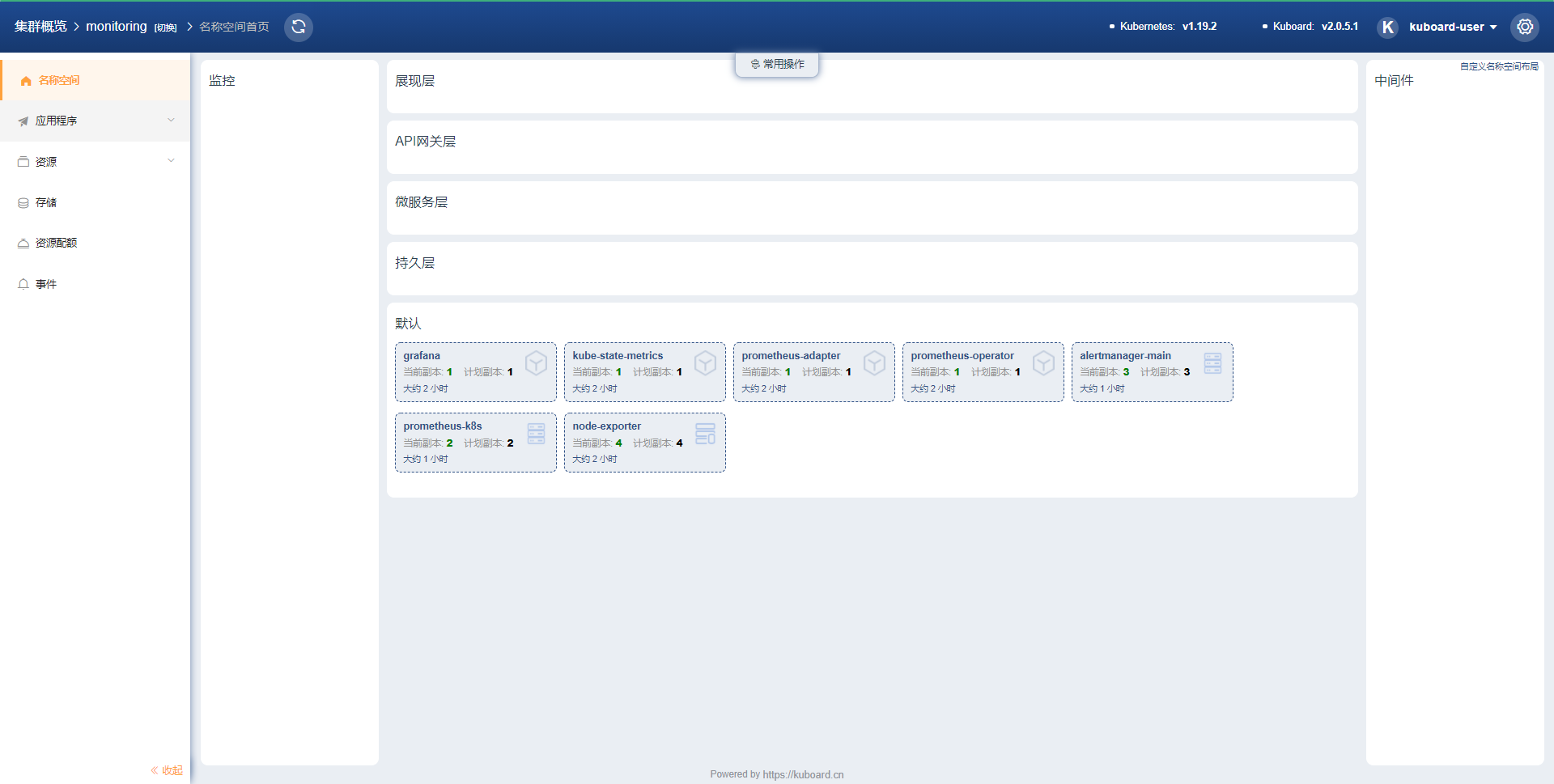

Prometheus

```
[root@k8s-master ~]# wget -O kube-prometheus-0.6.0.tar.gz https://github.com/prometheus-operator/kube-prometheus/archive/v0.6.0.tar.gz
[root@k8s-master ~]# tar zxvf kube-prometheus-0.6.0.tar.gz
[root@k8s-master ~]# cd kube-prometheus-0.6.0
[root@k8s-master ~]# kubectl create -f manifests/setup
[root@k8s-master ~]# until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done
[root@k8s-master ~]# kubectl create -f manifests/
[root@k8s-master ~]# kubectl get pod -n monitoring
NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          74m
alertmanager-main-1                    2/2     Running   0          74m
alertmanager-main-2                    2/2     Running   0          74m
grafana-7c9bc466d8-r566p               1/1     Running   0          88m
kube-state-metrics-66b65b78bc-mhgxq    3/3     Running   0          88m
node-exporter-2764r                    2/2     Running   0          88m
node-exporter-92pd7                    2/2     Running   0          88m
node-exporter-mkj2p                    2/2     Running   0          88m
node-exporter-mxrd9                    2/2     Running   0          88m
prometheus-adapter-557648f58c-j4jcz    1/1     Running   0          88m
prometheus-k8s-0                       3/3     Running   1          74m
prometheus-k8s-1                       3/3     Running   0          74m
prometheus-operator-5b7946f4d6-jqwbb   2/2     Running   0          88m
```

Because of port constraints, manually change the prometheus, alertmanager and grafana Services to NodePort by adding `type: NodePort` and a `nodePort`. 8001 lies within the 7000-10000 range configured earlier, and each Service needs its own nodePort. Example for grafana:

```yaml
---
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: '2020-12-05T09:37:14Z'
  labels:
    app: grafana
  managedFields:
    - apiVersion: v1
      fieldsType: FieldsV1
      fieldsV1:
        'f:metadata': {}
        'f:spec':
          'f:ports': {}
      manager: kubectl-create
      operation: Update
      time: '2020-12-05T09:37:14Z'
  name: grafana
  namespace: monitoring
  resourceVersion: '9637020'
  selfLink: /api/v1/namespaces/monitoring/services/grafana
  uid: 6e6edd10-68b4-43b6-93ba-9b72e280d411
spec:
  clusterIP: 10.96.98.51
  ports:
    - name: http
      port: 3000
      protocol: TCP
      targetPort: http
  selector:
    app: grafana
  sessionAffinity: None
  type: ClusterIP
```

Change the `spec` to:

```yaml
spec:
  clusterIP: 10.96.98.51
  ports:
    - name: http
      nodePort: 8001
      port: 3000
      protocol: TCP
      targetPort: http
  selector:
    app: grafana
  sessionAffinity: None
  type: NodePort
```

等待全部启动完毕即可

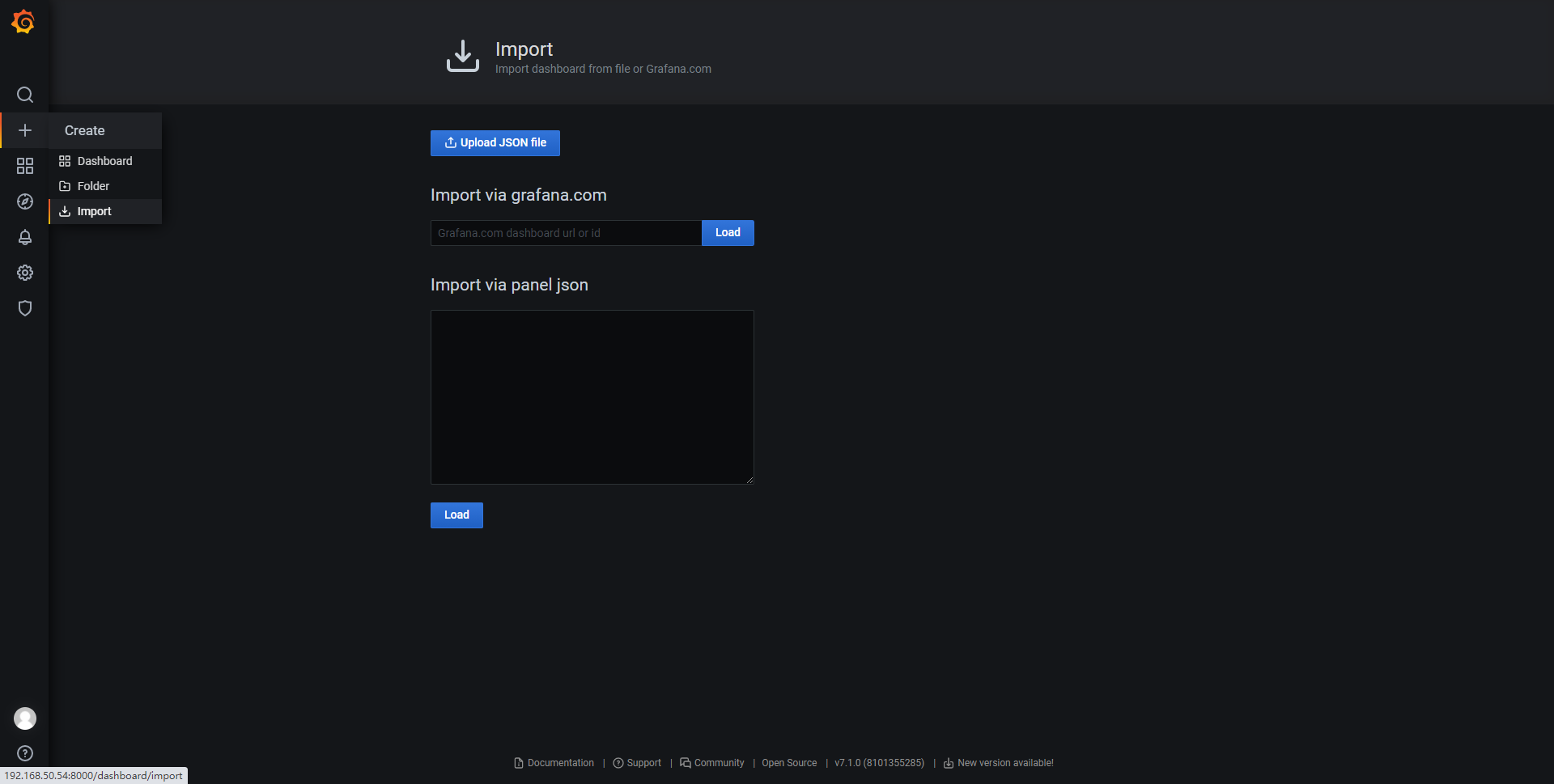

导入监控模板

完成
